Author: Floyd Smith of F5

Original article: 10 Tips for 10x Application Performance

Reprinted from: NGINX official website

Improving web application performance is more urgent than ever. The share of economic activity that happens online keeps growing; in the developed world, the Internet economy already accounts for more than 5% of the economy. In today's always-on, hyper-connected world, user expectations have changed dramatically: if your website doesn't respond instantly, or if your app has any noticeable delay, users will move on to your competitors.

For example, a study by Amazon nearly 10 years ago found that every 100-millisecond reduction in page load time increased its revenue by 1%, and user expectations have only risen since then. Another recent study found that more than half of the website owners surveyed had lost revenue or customers because of poor application performance.

How fast is fast enough? For each additional second a page takes to load, about 4% of users abandon it. Top e-commerce sites achieve a time to first interaction of one to three seconds, the range that yields the highest conversion rates. Web application performance clearly matters, and its importance is likely to keep growing.

Wanting better performance is easy; actually achieving visible results is the hard part. To help, this article presents 10 tips that can help you improve website performance by as much as 10x. It is the first in a series of blog posts describing how to improve application performance with tried-and-tested optimization techniques, with some help from NGINX. The series also covers the security improvements you may gain along the way.

Tip 1 – Use a reverse proxy server to speed up and secure applications

If your web application runs on a single machine, the fix for poor performance might seem simple: switch to a faster machine with more processors, more RAM, and a fast disk array, so that your WordPress server, Node.js app, Java app, and so on run faster on the new hardware. (If your application accesses a database server, the solution might still seem simple: get two faster machines and connect them with a faster network.)

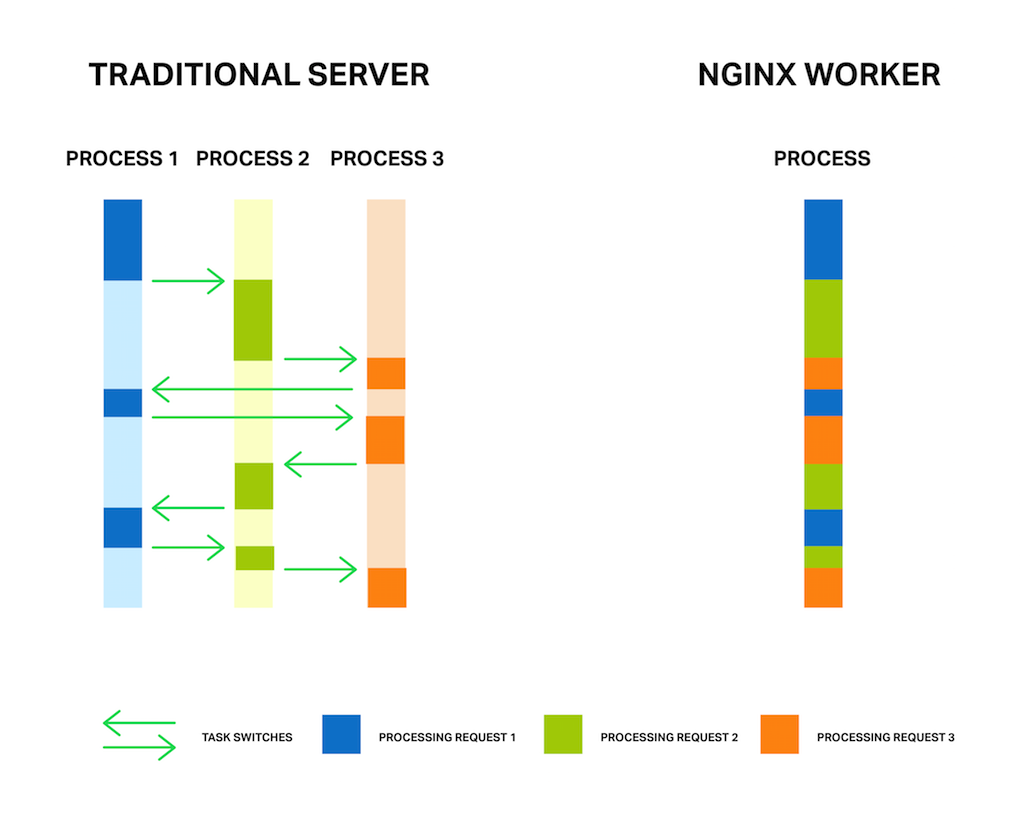

The trouble is, machine speed might not be the problem. Web applications often run slowly because the computer keeps switching among different kinds of tasks: interacting with users over thousands of connections, accessing files on disk, running application code, and more. The application server may thrash as a result: running out of memory, swapping blocks of memory to disk, and leaving many requests waiting on a single task such as disk I/O.

Instead of upgrading your hardware, you can take an entirely different approach: add a reverse proxy server to offload some of these tasks. A reverse proxy server sits in front of the machine running the application and handles Internet traffic. Only the reverse proxy server is connected directly to the Internet; it communicates with the application servers over a fast internal network.

A reverse proxy frees the application server from waiting on users to interact with the web application, so it can concentrate on building pages while the reverse proxy handles sending them to clients. Because it no longer has to wait for client responses, the application server can run at speeds close to those achieved in optimized benchmarks.

Adding a reverse proxy server can also add flexibility to your web server setup. For example, if a server performing a given task becomes overloaded, you can easily add another server of the same type; if a server goes down, it’s not difficult to replace it.

Given this flexibility, a reverse proxy server is often also a prerequisite for many other performance optimizations, such as:

- Load balancing (see Tip 2) – A load balancer running on the reverse proxy server shares traffic evenly across multiple application servers. With a load balancer in place, you can add application servers without changing your application at all.

- Caching static files (see Tip 3) – Files that are requested directly, such as image files or code files, can be stored on the reverse proxy server and sent directly to the client. This serves assets more quickly and offloads the application server, allowing the application to run faster.

- Securing your site – The reverse proxy server can be configured for high security and monitored so that attacks are recognized and handled quickly, keeping the application servers protected.

NGINX software was designed from the start as a reverse proxy server with the additional capabilities described above. NGINX uses an event-driven approach to processing, which makes it more efficient than traditional servers. NGINX Plus adds more advanced reverse proxy features, such as application health checks, specialized request routing, and advanced caching, along with technical support.

Tip 2 – Add a Load Balancer

Adding a load balancer is a relatively easy change that can significantly improve site performance and security. Instead of making a core web server bigger and more powerful, you use a load balancer to distribute traffic across multiple servers. Even if an application is poorly written or hard to scale, a load balancer can improve the user experience without any changes to the application.

A load balancer is, first, a reverse proxy server (see Tip 1): it receives Internet traffic and forwards requests to other servers. The key is that the load balancer can front two or more application servers, using an algorithm of your choice to split requests among them. The simplest approach is round robin, where each new request goes to the next server on the list. Another common method is sending each request to the server with the fewest active connections. NGINX Plus also offers session persistence, which keeps all requests in a given user's session on the same server.
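To make the approaches just described concrete, here is a minimal Python sketch of round robin, least connections, and a hash-based form of session persistence. The class and function names are illustrative, not NGINX APIs. Hashing a session ID is only one way to get persistence (NGINX Plus's own session persistence uses other mechanisms, such as cookies), and a real load balancer also deals with weights, server health, and concurrency.

```python
import itertools
import zlib
from typing import Dict, Iterator, List


class RoundRobin:
    """Send each new request to the next server in the list."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle: Iterator[str] = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        # min() returns the first minimum it sees, so ties go to the earliest-listed server.
        server = min(self.active, key=self.active.__getitem__)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a request on this server finishes."""
        self.active[server] -= 1


def sticky_pick(servers: List[str], session_id: str) -> str:
    """Hash-based persistence: a given session ID always maps to the same server."""
    # crc32 is stable across processes, unlike Python's built-in hash() for strings.
    return servers[zlib.crc32(session_id.encode("utf-8")) % len(servers)]
```

Round robin keeps rotating regardless of load, while least connections steers new requests away from a server that is still busy with long-running ones.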

A load balancer can significantly improve performance by preventing one server from being overloaded while others sit idle. It also makes scaling web server capacity easy, because you can add relatively low-cost servers and be confident they will be put to full use.

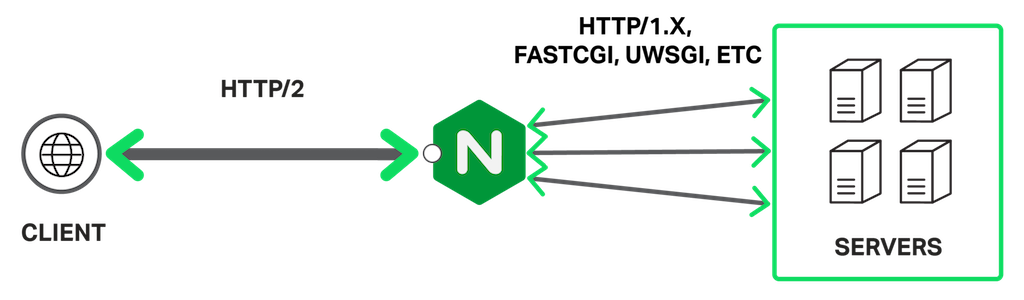

Protocols that can be load balanced include HTTP, HTTPS, SPDY, HTTP/2, WebSocket, FastCGI, SCGI, uwsgi, memcached, and several other application types, including TCP-based applications and other Layer 4 protocols. Analyze your web application to determine which of these you use and where performance is lagging.

The same server or servers used for load balancing can also handle other tasks, such as SSL termination, serving HTTP/1._x_ or HTTP/2 depending on what each client supports, and caching static files.

Load balancing is one of the most common uses of NGINX. For more information, download our eBook: Five Reasons to Choose a Software Load Balancer; for basic configuration instructions, see Load Balancing with NGINX and NGINX Plus: Part 1; for full documentation, see the NGINX Plus Administrator's Guide. NGINX Plus, our commercial product, supports more specialized load balancing features, such as routing based on server response time and load balancing of the Microsoft NTLM protocol.

Tip 3 – Cache Static and Dynamic Content

Caching improves web application performance by delivering content to clients faster. Caching can involve several strategies, used alone or in combination: preprocessing content for fast delivery when needed, storing content on faster devices, storing content closer to the client, and so on.

There are two types of caches:

- Static content caching – Files that change infrequently, such as image files (JPEG, PNG) and code files (CSS, JavaScript), can be stored on an edge server for fast retrieval from memory or disk.

- Dynamic content caching – Many web applications generate fresh HTML for every page request. By briefly caching one copy of the generated HTML, you can dramatically reduce the number of pages that must be generated while still delivering content that is fresh enough to meet your requirements.

For example, if a page is viewed 10 times per second and you cache it for 1 second, 90% of requests for the page are served from the cache. If you also cache static content separately, even freshly generated versions of the page may be made up largely of cached content.
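The 90% figure is simple arithmetic: if a page gets r requests per second and each cached copy lives T seconds, each cache lifetime sees r × T requests and only the first one misses, so the hit ratio is 1 - 1/(rT). The Python sketch below checks that formula with a small simulation. It assumes evenly spaced requests for a single page and a fixed TTL, which real traffic will not match exactly; the function names are illustrative.

```python
def expected_hit_ratio(requests_per_second: float, ttl_seconds: float) -> float:
    """Closed form 1 - 1/(r*T): each TTL window has r*T requests and one miss."""
    per_window = requests_per_second * ttl_seconds
    return 0.0 if per_window <= 1 else 1 - 1 / per_window


def simulate_hit_ratio(requests_per_second: int, ttl_seconds: float, duration_seconds: int) -> float:
    """Replay evenly spaced requests for one page against a fixed-TTL cache."""
    total = requests_per_second * duration_seconds
    hits = 0
    expires_at = None  # no cached copy yet
    for i in range(total):
        now = i / requests_per_second
        if expires_at is not None and now < expires_at:
            hits += 1  # served from the cache
        else:
            expires_at = now + ttl_seconds  # miss: regenerate the page and cache it
    return hits / total
```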

There are three main techniques used to cache content generated by a web application:

- Put content closer to users – The closer a copy of the content is to the user, the shorter the transmission time.

- Put content on faster machines – The faster the machine, the faster the retrieval.

- Move content off overused machines – Machines sometimes run much slower than their benchmark performance on a particular task because they are busy with other tasks. Caching on a separate machine improves performance for both cached and non-cached resources, because the host machine is less overloaded.

Caching for web applications can be implemented from the inside (the web application server) out. First, cache dynamic content to reduce the load on application servers. Next, cache static content (including temporary copies of what would otherwise be dynamic content), further offloading application servers. Then move caching off the application servers onto machines that are faster and/or closer to the user, unburdening the application servers and reducing retrieval and transmission times.

An optimized cache can greatly speed up your application. For many web pages, static data (such as large image files) often takes up more than half of the content. Retrieving and transmitting such data could take seconds without caching, but fractions of a second if the data is cached locally.

As an example of caching in practice, NGINX and NGINX Plus use two directives to set up caching: `proxy_cache_path` and `proxy_cache`. You specify the cache location and size, the maximum time files are kept in the cache, and other parameters. Using a third (and quite popular) directive, `proxy_cache_use_stale`, you can even direct the cache to supply stale content when the server that should provide fresh content is busy or down; for the client, something is better than nothing. This can greatly improve your site's or app's uptime and give users a sense of stability.
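The idea behind `proxy_cache_use_stale` can be sketched in a few lines of Python. This toy cache is an illustration, not how NGINX implements caching: `fetch` stands in for a hypothetical request to the origin server, and the HIT, MISS, and STALE labels are borrowed loosely from the values NGINX reports in `$upstream_cache_status`. When a cached entry has expired and the origin fails, the cache returns the expired copy instead of an error.

```python
import time
from typing import Callable, Dict, Tuple


class StaleServingCache:
    """Toy TTL cache that falls back to an expired copy when the origin fails."""

    def __init__(self, fetch: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch  # hypothetical call to the origin server
        self._ttl = ttl
        self._clock = clock
        self._store: Dict[str, Tuple[str, float]] = {}  # key -> (body, expires_at)

    def get(self, key: str) -> Tuple[str, str]:
        """Return (body, status), where status is "HIT", "MISS", or "STALE"."""
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[1]:
            return entry[0], "HIT"
        try:
            body = self._fetch(key)
        except Exception:  # treat any exception from fetch as the origin being busy or down
            if entry is not None:
                return entry[0], "STALE"  # something is better than nothing
            raise  # nothing cached, so the client sees the error
        self._store[key] = (body, now + self._ttl)
        return body, "MISS"
```

A production cache would also cap memory use and limit how long stale entries may be served.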

NGINX Plus has advanced caching features, including support for cache purging, a dashboard that visualizes cache status (to monitor real-time activity), and more.

For more information on NGINX caching, see the reference documentation and the NGINX Plus Administrator’s Guide.

Note: Caching crosses organizational boundaries between application developers, the people who make capital investment decisions, and those who run networks in real time. Sophisticated caching strategies like the ones described here are a good example of the value of DevOps, in which application developers, architects, and operators work together to meet goals for site functionality, response time, security, and business results such as completed transactions or sales.

Tip 4 – Compress Data

Compression is a potentially huge performance accelerator. Images (JPEG and PNG), video (MPEG-4), and music (MP3) all have carefully engineered and highly effective compression standards, each of which can shrink files by an order of magnitude or more.

Text data such as HTML (both plain text and HTML tags), CSS, and code (such as JavaScript) are often transmitted without compression. Compressing this data can have a particularly noticeable impact on perceived web application performance, especially for clients with slow or limited mobile connections.

That's because text data is often enough for users to interact with a page, while multimedia content is more often supporting material or a nice extra. Smart content compression can reduce the bandwidth requirements of HTML, JavaScript, CSS, and other text-based content by 30% or more, with a corresponding reduction in load times.
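To get a feel for how well text compresses, the following Python sketch gzips a made-up HTML fragment and reports the fraction of bytes saved. The sample and the function name are hypothetical, and the sample is far more repetitive than a typical page, so real savings will usually be smaller; the point is the measurement, not the number.

```python
import gzip


def compression_savings(text: str, level: int = 6) -> float:
    """Fraction of bytes saved by gzip-compressing the UTF-8 encoding of text."""
    raw = text.encode("utf-8")
    if not raw:
        return 0.0
    compressed = gzip.compress(raw, compresslevel=level)
    return 1 - len(compressed) / len(raw)


# Hypothetical, highly repetitive product list; real pages usually compress less.
sample_html = "".join(
    f'<li class="product"><a href="/item/{i}">Item {i}</a> <span class="price">$9.99</span></li>\n'
    for i in range(200)
)
print(f"gzip saved {compression_savings(sample_html):.0%} of {len(sample_html)} bytes")
```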

If you use SSL, compression reduces the amount of data that must be SSL-encoded, which offsets some of the CPU time spent compressing the data.

There are many ways to compress text data. For example, HTTP/2 (see Tip 6) uses a novel compression scheme, HPACK, designed specifically for header data. As another example, you can turn on GZIP compression in NGINX. If you precompress text data on the server, you can use the `gzip_static` directive to serve the compressed **.gz** files directly.
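Serving precompressed files with `gzip_static` assumes the .gz copies already exist next to the originals. Below is a minimal Python sketch of a build step that creates them. The extension list is an assumption to adjust for your site, and the script copies each original's timestamps onto its .gz file because, as we read the NGINX `gzip_static` documentation, original and compressed files should share the same modification time.

```python
import gzip
import os
import shutil
from pathlib import Path

# Assumed set of compressible text types; adjust for your site.
TEXT_EXTENSIONS = {".html", ".css", ".js", ".svg", ".json", ".txt"}


def precompress(root: Path, level: int = 9) -> list[Path]:
    """Write name.ext.gz next to every text file under root; return the .gz paths."""
    written = []
    for path in sorted(root.rglob("*")):  # list is built before any .gz file is written
        if not path.is_file() or path.suffix not in TEXT_EXTENSIONS:
            continue
        target = path.with_name(path.name + ".gz")
        with path.open("rb") as src, gzip.open(target, "wb", compresslevel=level) as dst:
            shutil.copyfileobj(src, dst)
        st = path.stat()
        os.utime(target, ns=(st.st_atime_ns, st.st_mtime_ns))  # match the original's timestamps
        written.append(target)
    return written
```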

Tip 5 – Optimize SSL/TLS

The Secure Sockets Layer (SSL) protocol and its successor, Transport Layer Security (TLS), are used on more and more websites. SSL/TLS improves site security by encrypting data transmitted between the origin server and the user. Google now treats the use of SSL/TLS as a positive signal in search engine rankings, which has helped speed its adoption.

While SSL/TLS has grown in popularity, many websites have been plagued by their associated performance issues. SSL/TLS slows down websites for two reasons:

- The establishment of encryption keys requires an initial handshake whenever a new connection is opened. This problem is exacerbated by the way browsers use HTTP/1._x_ to make multiple connections to each server.

- Server-side encrypted data and client-side decrypted data also incur ongoing overhead.

To encourage the use of SSL/TLS, HTTP/2 and SPDY (see Tip 6) were designed so that browsers need only one connection per browser session, eliminating a major source of SSL overhead. Even so, there is still plenty of room to improve the performance of applications delivered over SSL/TLS.

How you optimize SSL/TLS varies from web server to web server. For example, NGINX uses OpenSSL, running on standard commodity hardware, to provide performance comparable to dedicated hardware solutions. NGINX's SSL performance is well documented, and it minimizes the time and CPU cost of SSL/TLS encryption and decryption.

In addition, a separate blog post details other ways to improve SSL/TLS performance. In brief, these techniques are:

- Session caching – Use the `ssl_session_cache` directive to cache the parameters used when securing each new SSL/TLS connection.

- Session tickets or IDs – Store information about specific SSL/TLS sessions in a ticket or ID so that a connection can be reused smoothly without a new handshake.

- OCSP stapling – Reduce handshake time by caching SSL/TLS certificate information.
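Session caching and session tickets pay off because a resumed handshake is shorter than a full one. The Python sketch below estimates average handshake latency for a given resumption rate. It assumes TLS 1.2 behavior, where a full handshake takes two round trips and an abbreviated (resumed) handshake takes one, and it ignores TCP setup and CPU time; TLS 1.3 changes these numbers, so treat the defaults as illustrative.

```python
def expected_handshake_ms(rtt_ms: float, resumption_rate: float,
                          full_rtts: int = 2, resumed_rtts: int = 1) -> float:
    """Average TLS handshake latency when a fraction of connections resume a session.

    Defaults assume TLS 1.2: full handshake = 2 round trips, resumed = 1.
    """
    if not 0.0 <= resumption_rate <= 1.0:
        raise ValueError("resumption_rate must be between 0 and 1")
    average_rtts = resumption_rate * resumed_rtts + (1 - resumption_rate) * full_rtts
    return rtt_ms * average_rtts


for rate in (0.0, 0.5, 0.9):
    print(f"{rate:.0%} resumed: about {expected_handshake_ms(80, rate):.0f} ms per handshake")
```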

NGINX and NGINX Plus support SSL/TLS termination: they handle encryption and decryption of client traffic while communicating with other servers in clear text. To set up SSL/TLS termination in NGINX or NGINX Plus, see the instructions for HTTPS connections and encrypted TCP connections.

Tip 6 – Implement HTTP/2 or SPDY

For sites that already use SSL/TLS, HTTP/2 and SPDY are very likely to improve performance, because a single connection requires just one handshake. For sites not yet using SSL/TLS, HTTP/2 and SPDY make a move to SSL/TLS, which normally slows performance, roughly a wash from a responsiveness standpoint.

Google introduced SPDY in 2009 as a way to achieve faster performance on top of HTTP/1._x_. HTTP/2 is a recently approved IETF standard based on SPDY. SPDY is widely supported but will soon be superseded by HTTP/2.

A key feature of SPDY and HTTP/2 is the use of a single connection rather than multiple connections. A single connection is multiplexed, so it can carry multiple requests and responses simultaneously.

These protocols avoid the overhead of setting up and managing the multiple connections that browsers need with HTTP/1._x_ by making good use of a single connection. A single connection is especially helpful with SSL, because it minimizes the time-consuming handshake that SSL/TLS needs to set up a secure connection.
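A rough count shows why a single connection matters. The Python sketch below tallies full TLS handshakes for one page load with no session resumption. The numbers are hypothetical: a page whose assets are sharded across three hostnames, and a browser that opens up to six parallel HTTP/1.x connections per hostname (a common browser default, assumed here rather than guaranteed).

```python
def full_handshakes(hostnames: int, connections_per_host: int) -> int:
    """Full TLS handshakes for one page load, assuming no session resumption."""
    return hostnames * connections_per_host


HTTP1_CONNECTIONS_PER_HOST = 6  # assumed browser limit for parallel HTTP/1.x connections
HTTP2_CONNECTIONS_PER_HOST = 1  # one multiplexed connection carries every request

sharded_http1 = full_handshakes(3, HTTP1_CONNECTIONS_PER_HOST)  # hypothetical: 3 shards
sharded_http2 = full_handshakes(3, HTTP2_CONNECTIONS_PER_HOST)
single_host_http2 = full_handshakes(1, HTTP2_CONNECTIONS_PER_HOST)
print(f"HTTP/1.x: {sharded_http1}, HTTP/2: {sharded_http2}, HTTP/2 without sharding: {single_host_http2}")
```

Handshakes on parallel connections overlap in time, so the saving shows up mostly as less server CPU work and fewer connections to manage, rather than as a straight sum of latency.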

The SPDY protocol requires the use of SSL/TLS; HTTP/2 does not officially require this, but all browsers currently supporting HTTP/2 will only use it if SSL/TLS is enabled. That is, a browser that supports HTTP/2 can only use HTTP/2 if the website uses SSL and its server accepts HTTP/2 traffic, otherwise the browser communicates over HTTP/1._x_.

Once you implement SPDY or HTTP/2, you no longer need typical HTTP performance optimizations such as domain sharding, resource merging, and image spriting. These changes also make your code and deployments simpler and easier to manage. To learn more about the changes HTTP/2 brings, see our white paper: HTTP/2 for Web Application Developers.

NGINX has supported SPDY for a long time, and most sites that use SPDY today run NGINX. NGINX was also an early adopter of HTTP/2: both NGINX open source and NGINX Plus have supported it since September 2015.

Over time, we at NGINX expect most sites to fully enable SSL and move to HTTP/2. This will improve security and, as new optimizations are found and implemented, lead to simpler code that performs better.

Tip 7 – Keep Your Software Up to Date

One simple way to improve application performance is to choose the components of your software stack based on their stability and performance. In addition, because developers of high-quality components are likely to keep improving performance and fixing bugs over time, it pays to use the latest stable version of the software. New releases receive more attention from developers and the user community, and they take advantage of new compiler optimizations, including tuning for new hardware.

New stable releases are also usually better than older releases from a compatibility and performance standpoint. Sticking to software updates also keeps you on top of tuning, bug fixes, and security alerts.

Staying on older versions can also keep you from using new capabilities. For example, HTTP/2 currently requires OpenSSL 1.0.1; starting in mid-2016, it will require OpenSSL 1.0.2, which was released in January 2015.

NGINX users can start by upgrading to the latest version of NGINX or NGINX Plus, which have new features such as socket sharding and thread pooling (see tip 9), and are continuously tuned for performance. Then take a deeper look at the software in the stack and try to migrate to the latest version.

Tip 8 – Tune Linux for Performance

Linux is the underlying operating system for most web servers today. As a cornerstone of infrastructure, Linux holds significant tuning potential. By default, many Linux systems are conservatively tuned for low-resource, typical desktop workloads. This means that in web application use cases, tuning is essential to achieve maximum performance.

Linux optimizations vary by web server. Taking NGINX as an example, you can focus on speeding up Linux from the following aspects:

- Backlog queue – If you have connections that appear to be stalling, consider increasing `net.core.somaxconn`, the maximum number of connections that can be queued awaiting attention from NGINX. You will see error messages if the existing connection limit is too small; increase this parameter gradually until the error messages stop.

- File descriptors – NGINX uses up to two file descriptors for each connection. If your system is serving a lot of connections, you might need to increase `fs.file-max`, the system-wide limit on file descriptors, and `nofile`, the per-user file descriptor limit, to support the increased load.

- Ephemeral ports – When used as a proxy, NGINX creates temporary (ephemeral) ports for each upstream server. You can widen the port range set by `net.ipv4.ip_local_port_range` to increase the number of available ports. You can also reduce the timeout before an inactive port is reused with `net.ipv4.tcp_fin_timeout`, allowing for faster turnover.
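On Linux these kernel parameters are exposed as files under /proc/sys, with the dots in a parameter name mapping to directory separators (the system-wide descriptor limit appears as fs/file-max). The Python sketch below reads the current values so you can record a baseline before tuning. The helper names are hypothetical, and the per-user `nofile` limit is not covered because it is set through `ulimit` or /etc/security/limits.conf rather than /proc/sys. Changes themselves are normally made with `sysctl -w` or in /etc/sysctl.conf.

```python
from pathlib import Path

PROC_SYS = Path("/proc/sys")

# Parameters discussed in the list above, using their /proc/sys spellings.
PARAMETERS = [
    "net.core.somaxconn",
    "fs.file-max",
    "net.ipv4.ip_local_port_range",
    "net.ipv4.tcp_fin_timeout",
]


def read_sysctl(name: str, root: Path = PROC_SYS) -> str:
    """Read a dotted kernel parameter such as 'net.core.somaxconn' (dots map to '/')."""
    return (root / name.replace(".", "/")).read_text().strip()


def ephemeral_port_count(range_value: str) -> int:
    """Number of ports in an ip_local_port_range value such as '32768 60999'."""
    low, high = (int(part) for part in range_value.split())  # kernel separates them with a tab
    return high - low + 1


def report(root: Path = PROC_SYS) -> dict[str, str]:
    """Current values of PARAMETERS (Linux only, unless root points at a copy of the tree)."""
    return {name: read_sysctl(name, root) for name in PARAMETERS}
```

On a Linux host, `report()` with no arguments reads the live values; the `root` parameter exists so the functions can be pointed at a copy of the tree for testing.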

For NGINX, see the NGINX Performance Tuning Guide to learn how to optimize your Linux system so that it can handle heavy network traffic with ease.

Tip 9 – Tune Your Web Server for Performance

No matter what web server you use, it needs to be tuned to improve the performance of your web application. The following recommendations generally apply to any web server, with some settings specific to NGINX. The main optimization techniques are:

- access log—— Instead of writing the log of each request to disk immediately, you can put the entries in the memory buffer first, and then write them in batches.For NGINX, the

buffer=_size_parameter added toaccess_logIn the command, the log is written to disk after the memory buffer is full.if addflush=_time_parameter, the contents of the buffer are also written to disk after the specified time has elapsed. - buffer– Buffering is used to hold a portion of the response in memory until the buffer is full, enabling more efficient communication with the client. Responses that don’t fit in memory are written to disk, resulting in slower performance.With NGINX buffering enabled, you can use

proxy_buffer_sizeandproxy_buffersCommands are managed. - Client keepalive connection– Keepalive connections can reduce overhead, especially when using SSL/TLS.For NGINX, you can increase the number of

keepalive_requestsThe maximum number of (default is 100), while increasing thekeepalive_timeoutA value that keeps the keepalive connection open for a longer period of time, thereby speeding up subsequent requests. - Upstream keepalive connections—— Upstream connections, that is, connections to servers such as application servers and database servers, can also benefit from the setting of keepalive connections.For Upstream connections, you can add

keepalive, the number of idle keepalive connections kept open for each worker process. This increases reuse of connections, reducing the need to open entirely new connections. For more information, see our blog post: HTTP Keepalives and Web Performance. - Limiting– Limiting the resources used by clients can improve performance and security. For NGINX,

limit_connandlimit_conn_zonedirective can limit the number of connections from a given source,limit_ratebandwidth can be limited. These settings prevent legitimate users from “gobbling” resources and can also help prevent attacks.limit_reqandlimit_req_zoneDirectives can limit client requests.For connection to upstream server you can use in upstream configuration blockserverInstructivemax_connsparameter to limit connections to upstream servers, preventing overloading.AssociatedqueueThe instruction creates a queue and upon reaching themax_connsHolds the specified number of requests for the specified length of time after throttling. - Worker process– The Worker process is responsible for processing requests. NGINX uses an event-based model and an operating system-dependent mechanism to efficiently distribute requests among worker processes.It is recommended to

worker_processesThe value of is set to one per CPU.Most systems support raising theworker_connectionsThe maximum value for (default is 512), you can experiment to find the best value for your system. - Socket Sharding– The socket listener typically distributes new connections to all worker processes. Socket Sharding creates a socket listener for each worker process, and the kernel assigns a connection to the socket listener when it is available. This reduces lock contention and improves performance on multi-core systems.To enable socket sharding, use the

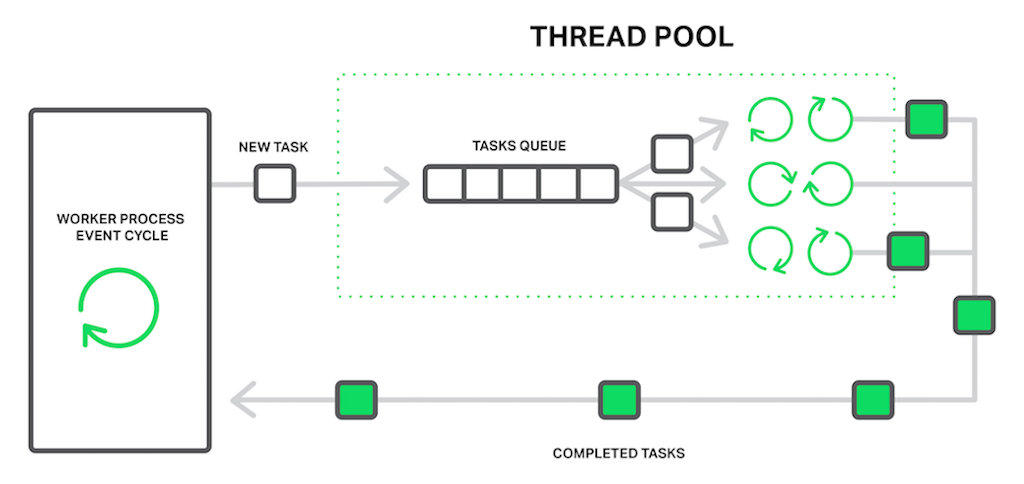

listenadd to the commandreuseportparameter. - Thread Pool– Any computer process can be slowed down by a slow operation. For Web server software, disk access can hinder many faster operations, such as in-memory calculations or information replication. When using a thread pool, slow operations are distributed to a separate set of tasks while the main processing loop continues to run faster operations. After the disk operation is complete, the results are returned to the main processing loop. NGINX will

read()system calls andsendfile()These two operations are offloaded to the thread pool.
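The access-log buffering described above trades many small disk writes for a few larger ones. The Python class below is a simplified model of that behavior, not NGINX's implementation: lines accumulate in memory and are written out when the next line would overflow the buffer or when the flush interval has passed. The class name and parameters are hypothetical, and this sketch only checks the interval when a new line is logged, so callers should also call `flush()` at shutdown.

```python
import time
from typing import Callable, List, TextIO


class BufferedLogWriter:
    """Batch log lines in memory; write when the buffer would overflow or an interval passes."""

    def __init__(self, out: TextIO, buffer_bytes: int, flush_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._out = out
        self._limit = buffer_bytes
        self._interval = flush_seconds
        self._clock = clock
        self._lines: List[str] = []
        self._size = 0  # bytes currently buffered
        self._last_flush = clock()
        self.writes = 0  # batched writes issued to the underlying file

    def log(self, line: str) -> None:
        entry = line + "\n"
        entry_size = len(entry.encode("utf-8"))
        if self._lines and self._size + entry_size > self._limit:
            self.flush()  # like buffer=size: the next line would not fit
        self._lines.append(entry)
        self._size += entry_size
        if self._clock() - self._last_flush >= self._interval:
            self.flush()  # like flush=time: don't hold entries too long

    def flush(self) -> None:
        if self._lines:
            self._out.write("".join(self._lines))
            self.writes += 1
            self._lines.clear()
            self._size = 0
        self._last_flush = self._clock()
```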

Tip: If you change any operating system or supporting service settings, change one setting at a time and then test performance. If the change causes problems or doesn't make your site faster, change it back.

For more information on NGINX web server tuning, see our blog post: Optimizing NGINX Performance.
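The thread-pool item in the list above can be illustrated with Python's asyncio, which, like NGINX, runs an event loop. In this sketch (not NGINX code), blocking reads are handed to the default thread pool with `asyncio.to_thread`, so the loop keeps handling fast work while the reads are pending. `blocking_read` is a hypothetical stand-in that sleeps instead of touching a disk, and the paths are made up.

```python
import asyncio
import time


def blocking_read(path: str) -> str:
    """Hypothetical stand-in for a slow disk read: sleeps instead of doing I/O."""
    time.sleep(0.2)
    return f"contents of {path}"


async def handle_fast_work(count: int) -> int:
    """Cheap in-memory tasks the event loop keeps serving while reads are pending."""
    done = 0
    for _ in range(count):
        await asyncio.sleep(0.01)  # yield to the loop between tasks
        done += 1
    return done


async def main() -> tuple[list[str], int, float]:
    start = time.perf_counter()
    # asyncio.to_thread runs each blocking call in the loop's default thread pool,
    # so the event loop itself never blocks on the "disk".
    reads = [asyncio.to_thread(blocking_read, f"/srv/static/file{i}") for i in range(4)]
    *contents, fast_done = await asyncio.gather(*reads, handle_fast_work(10))
    return contents, fast_done, time.perf_counter() - start
```

Run it with `asyncio.run(main())`. Because the four 0.2-second reads overlap in the pool, the batch should finish in roughly the time of one read rather than four, while the fast tasks complete in the meantime.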

Tip 10 – Monitor real-time activity to troubleshoot issues and identify bottlenecks

The key to an efficient approach to application development and delivery is watching your application's real-world performance closely and in real time. You need to be able to monitor activity within specific devices and across your web infrastructure.

Monitoring site activity is mostly passive: it tells you what's going on, and it's up to you to spot and fix problems.

Monitoring can catch several different kinds of problems:

- The server is down.

- The server is running erratically, causing connections to drop.

- The server is experiencing a large number of cache misses.

- The server is not sending the correct content.

Global application performance monitoring tools such as New Relic or Dynatrace can help you monitor page load times remotely, and NGINX can help you monitor the application delivery side of things. Application performance data can show when your optimizations are having a real impact on users and when you need to scale your infrastructure to meet traffic demands.

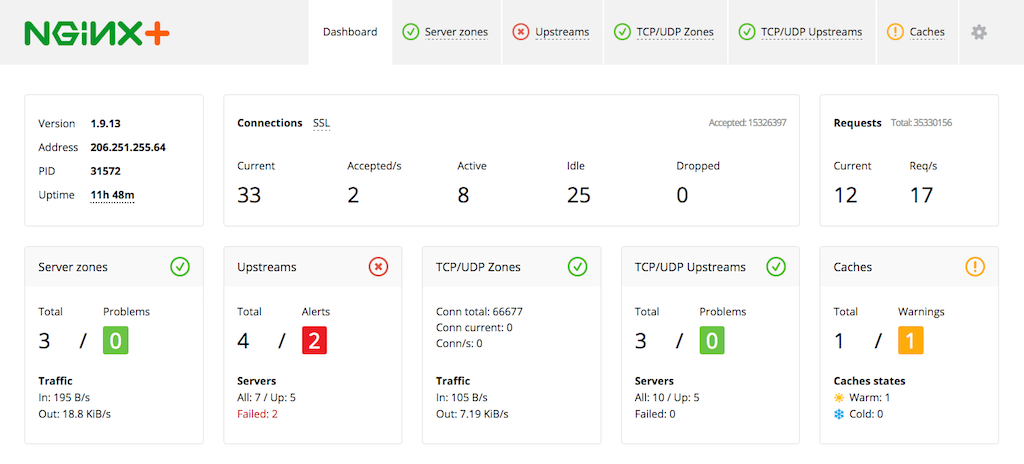

To help you find and fix issues quickly, NGINX Plus adds application-aware health checks: synthetic transactions that are repeated regularly and alert you to problems. NGINX Plus also offers session draining, which stops new connections to a server while its existing tasks complete, and a slow-start capability that lets a recovered server ramp up gradually to its normal share of traffic within a load-balanced group. Used effectively, health checks let you catch issues before they significantly affect the user experience, while session draining and slow start let you replace servers without degrading perceived performance or uptime. The figure above shows the built-in NGINX Plus live activity monitoring dashboard for a web infrastructure, covering servers, TCP connections, and caching.
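The logic behind active health checks, session draining, and slow start can be modeled in a few lines. The Python sketch below is purely illustrative: NGINX Plus provides these features natively, and the field names, the threshold of three failed checks, and the linear weight ramp over `slow_start` seconds are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Backend:
    name: str
    weight: int
    fails: int = 0
    healthy: bool = True
    draining: bool = False  # accept no new connections; let existing ones finish
    recovered_at: Optional[float] = None  # when the server last passed a check after being down


def record_check(b: Backend, ok: bool, now: float, fails_to_mark_down: int = 3) -> None:
    """Apply the result of one periodic synthetic health check."""
    if ok:
        if not b.healthy:
            b.healthy = True
            b.recovered_at = now  # begin the slow-start ramp
        b.fails = 0
    else:
        b.fails += 1
        if b.fails >= fails_to_mark_down:
            b.healthy = False


def effective_weight(b: Backend, now: float, slow_start: float = 30.0) -> float:
    """Share of new connections this server should receive right now."""
    if not b.healthy or b.draining:
        return 0.0
    if b.recovered_at is None or slow_start <= 0:
        return float(b.weight)
    progress = min(1.0, (now - b.recovered_at) / slow_start)  # assumed linear ramp
    return b.weight * progress
```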

Conclusion – Achieving 10x Performance Improvement

The potential for improvement varies from application to application, and the actual gains depend on your budget, the time you can invest, and gaps in your existing implementation. So how might you achieve a 10x performance improvement for your own application?

To help you understand the potential impact of each optimization technique, here’s a list of some of the possible improvements that each technique described in this article might bring:

- Reverse proxy server and load balancing – No load balancing, or poor load balancing, can cause episodes of very poor performance. Adding a reverse proxy server such as NGINX can keep web applications from thrashing between memory and disk. Load balancing can move processing from overburdened servers to available ones and makes scaling easy. These changes can improve performance dramatically: a 10x improvement over the worst moments of your original deployment is easily achieved, and even smaller gains are a big step up overall.

- Caching dynamic and static content – If you have an overloaded web server that doubles as your application server, caching dynamic content alone can deliver a 10x improvement at peak times, and caching static files can improve performance several times over as well.

- Compressing data – Using media compression formats such as JPEG for photos, PNG for graphics, MPEG-4 for movies, and MP3 for music can greatly improve performance. Once these are in use, compressing text data (code and HTML) can make initial page loads twice as fast.

- Optimizing SSL/TLS – Secure handshakes have a big impact on performance, so optimizing them can make initial responses as much as twice as fast, particularly for text-heavy sites. Optimizing media file transfers under SSL/TLS is likely to yield only small improvements.

- Implementing HTTP/2 and SPDY – When used with SSL/TLS, these protocols are likely to bring incremental improvements to overall site performance.

- Tuning Linux and web server software such as NGINX – Fixes such as optimizing buffering, using keepalive connections, and offloading time-consuming tasks to a separate thread pool can significantly boost performance. Thread pools, for example, can speed up disk-intensive tasks by about an order of magnitude.

We hope you will give these techniques a try, and look forward to hearing your thoughts on improving app performance.

