MindAudio is a full-scene AI framework MindSpore Established, open source all-in-one toolkit for the speech domain. It provides a series of APIs for common audio data processing, audio feature extraction, and audio data enhancement in the field of speech. Users can conveniently perform data preprocessing; it provides common data sets and SoTA models, and supports multiple speech processing tasks such as speech recognition, text to text, etc. Voice generation, voiceprint recognition, voice separation, etc.

main features

- Rich data processing API MindAudio provides a large number of easy-to-use data processing APIs, users can easily implement audio data analysis, and perform feature extraction and enhancement on data in audio algorithm tasks.

>>> import mindaudio # 读取音频文件 >>> test_data, sr = mindaudio.read(data_path) # 对原始数据进行变速 >>> matrix = mindaudio.speed_perturb(signal, orig_freq=16000, speeds=[90, 100])

- Integrate commonly used data sets and perform data preprocessing with one click Due to the large number of data sets in the field of audio deep learning, the processing process is complicated and unfriendly to novices. MindAudio provides a set of efficient data processing solutions for different data, and supports users to make customized modifications according to their needs.

>>> from ..librispeech import create_base_dataset, train_data_pipeline # 创建基础数据集 >>>ds_train = create_base_dataset(manifest_filepath,labels) # 进行数据特征提取 >>>ds_train = train_data_pipeline(ds_train, batch_size=64)

- Supports multiple mission models MindAudio provides a variety of task models, such as DeepSpeech2 in the ASR task, WavGrad in the TTS task, etc., and provides pre-trained weights, training strategies and performance reports to help users quickly get started with reproducing audio domain tasks.

- flexible and efficient MindAudio is developed based on the highly efficient deep learning framework MindSpore. It has features such as automatic parallelism and automatic differentiation, supports different hardware platforms (CPU/GPU/Ascend), and supports efficiency-optimized static image mode and flexible debugging dynamic image mode.

Audio Data Analysis

mindaudio provides a series of commonly used audio data processing APIs, which can be easily called for data analysis and feature extraction.

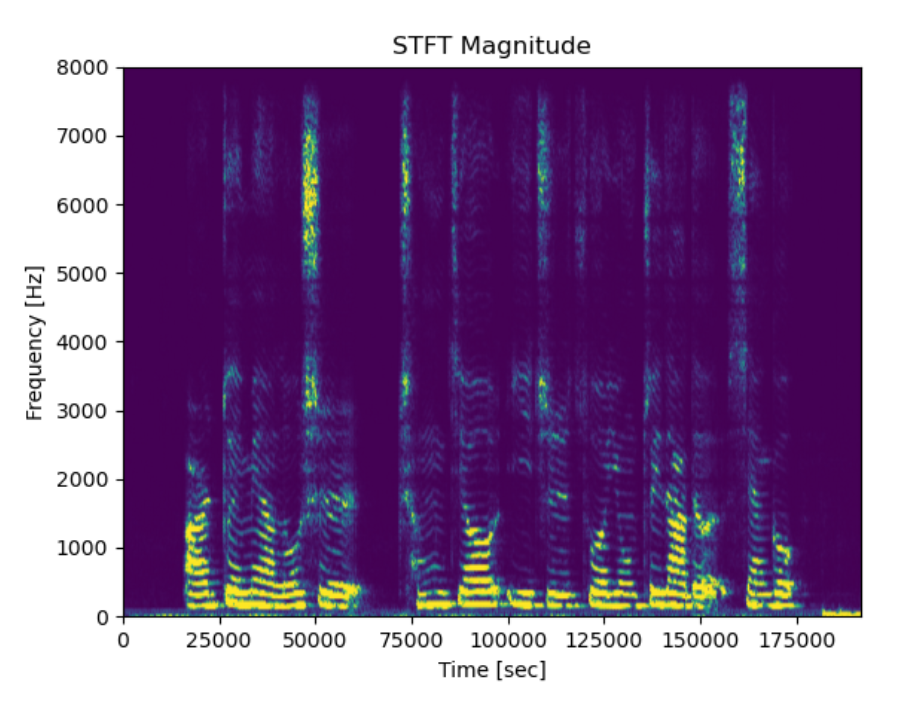

>>> import mindaudio >>> import numpy as np >>> import matplotlib.pyplot as plt # 读取音频文件 >>> test_data, sr = mindaudio.read(data_path) # 进行数据特征提取 >>> n_fft = 512 >>> matrix = mindaudio.stft(test_data, n_fft=n_fft) >>> magnitude, _ = mindaudio.magphase(matrix, 1) # 画图展示 >>> x = [i for i in range(0, 256*750, 256)] >>> f = [i/n_fft * sr for i in range(0, int(n_fft/2+1))] >>> plt.pcolormesh(x,f,magnitude, shading='gouraud', vmin=0, vmax=np.percentile(magnitude, 98)) >>> plt.title('STFT Magnitude') >>> plt.ylabel('Frequency [Hz]') >>> plt.xlabel('Time [sec]') >>> plt.show()

The result display:

#MindAudio #Homepage #Documentation #Downloads #Open #Source #Integrated #Toolkit #Speech #News Fast Delivery