Author: Liu Wei

Recently I came across a discussion on Reddit: why doesn’t NGINX support hot reloading? At first glance this seems counterintuitive. Doesn’t the world’s most widely used web server support hot reloading? Has everyone been using the nginx -s reload command wrong? With these questions in mind, let’s explore the story of hot reloading and NGINX.

Introduction to NGINX

NGINX is a cross-platform, open source web server written in C. According to statistics, more than 40% of the 1,000 highest-traffic websites in the world use NGINX to handle massive volumes of requests.

What advantages make NGINX stand out from other web servers and keep its usage so high?

I think the core reason is that NGINX is inherently good at high concurrency and stays efficient under a heavy load of concurrent requests. Compared with contemporaries such as Apache and Tomcat, its event-driven design, fully asynchronous network I/O, and careful memory allocation management squeeze the most out of server hardware, making NGINX synonymous with high-performance web servers.

Of course, there are other reasons as well, such as:

- A highly modular design, which has given NGINX numerous feature-rich official modules and third-party extension modules.

- The permissive BSD license, which makes countless developers willing to contribute their ideas to NGINX.

- Support for hot reloading, which lets NGINX provide uninterrupted 24/7 service.

About hot reloading

What should hot reloading look like? In my view, first of all it should be imperceptible to users: the server or upstream configuration is updated dynamically while user requests keep being served and existing connections stay open.

So why is hot reloading needed? In today’s cloud-native era, microservice architectures are prevalent, and more and more scenarios require frequent service changes, such as bringing reverse-proxied domain names online and offline, changing upstream addresses, and updating IP blacklists and whitelists. All of these depend on hot reloading.

So how does NGINX implement hot reloading?

How NGINX hot reloading works

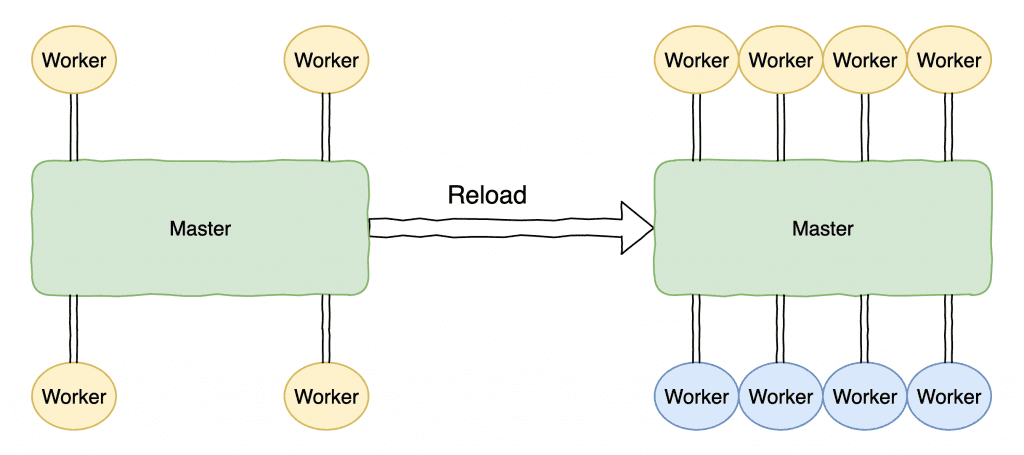

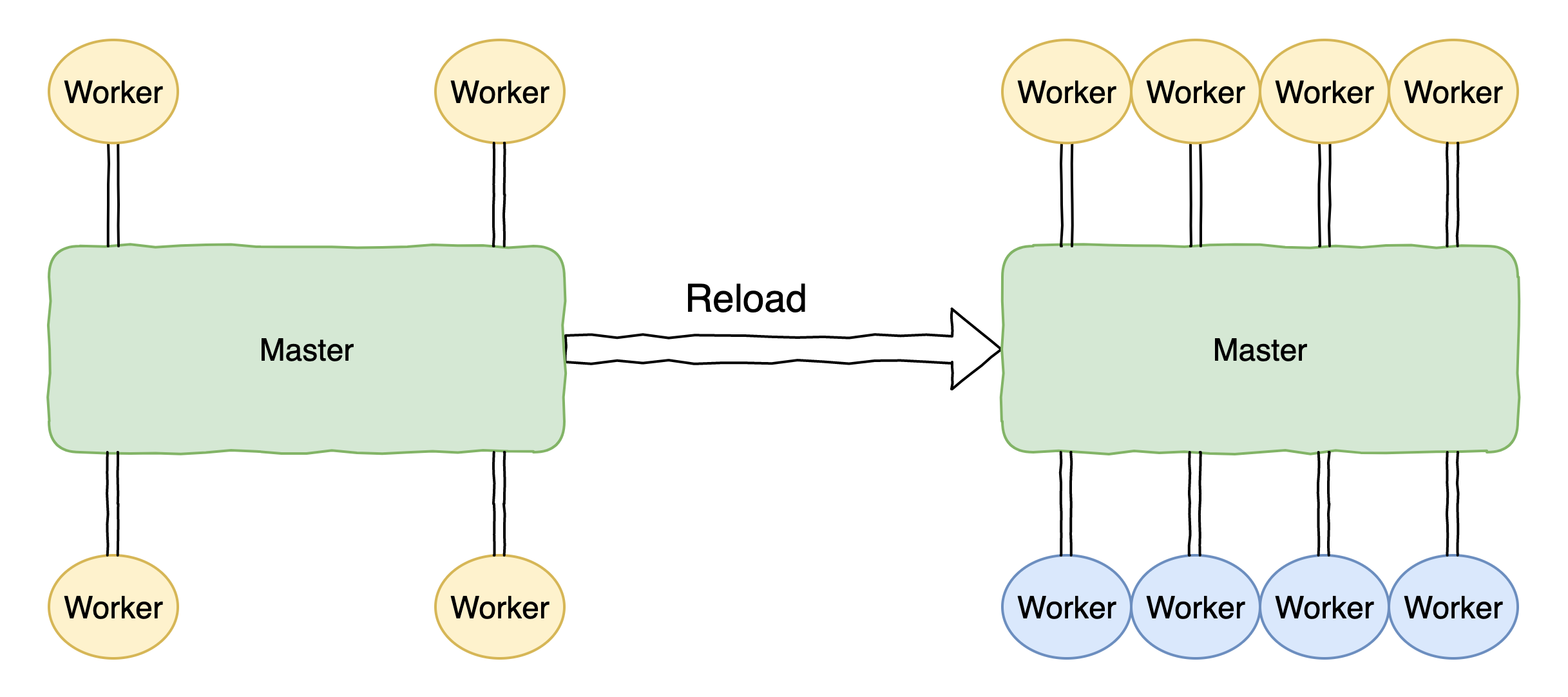

Running the nginx -s reload command is equivalent to sending a HUP signal to the NGINX master process. After the master process receives the HUP signal, it opens the new listening ports in turn and then starts new worker processes.

At this point there are two sets of worker processes, old and new. Once the new workers are up, the master sends the QUIT signal to the old workers for a graceful shutdown. When an old worker receives QUIT, it first closes its listening handles, so new connections flow only into the new workers, and the old worker exits after it finishes handling its current connections.
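The sequence described above can be modeled in a few lines. The following is only a toy simulation, not NGINX code: the Master and Worker classes, the fake PIDs, and the method names are all hypothetical, and no real processes or signals are involved. It shows that after a reload new connections land only on the new generation, while an old worker with an open connection lingers until that connection closes.

```python
from dataclasses import dataclass, field
from itertools import count

_pids = count(100)  # fake PIDs, for the simulation only


@dataclass
class Worker:
    generation: int
    pid: int = field(default_factory=lambda: next(_pids))
    listening: bool = True       # still accepting new connections?
    active_connections: int = 0  # connections currently being served
    exited: bool = False

    def on_quit(self) -> None:
        """Graceful shutdown: stop accepting, then exit once idle."""
        self.listening = False
        self.try_exit()

    def try_exit(self) -> None:
        if not self.listening and self.active_connections == 0:
            self.exited = True


@dataclass
class Master:
    worker_count: int = 2
    generation: int = 0
    workers: list = field(default_factory=list)

    def start(self) -> None:
        self.workers = [Worker(self.generation) for _ in range(self.worker_count)]

    def on_hup(self) -> None:
        """Reload: start new workers first, then ask the old ones to quit."""
        old = [w for w in self.workers if w.listening]
        self.generation += 1
        self.workers += [Worker(self.generation) for _ in range(self.worker_count)]
        for w in old:
            w.on_quit()

    def accept(self) -> Worker:
        """A new connection can only land on a worker that still listens."""
        target = min((w for w in self.workers if w.listening),
                     key=lambda w: w.active_connections)
        target.active_connections += 1
        return target


master = Master()
master.start()
busy = master.accept()   # one long-lived connection on generation 0
master.on_hup()          # the reload

new_conn_worker = master.accept()
print(new_conn_worker.generation)              # generation serving the new connection
print([w.exited for w in master.workers[:2]])  # state of the two old workers

busy.active_connections -= 1  # the long-lived connection finally closes
busy.try_exit()
print(busy.exited)
```

The old worker that was idle exits immediately, while the one holding a connection stays in the shutting-down state for as long as that connection lives.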

Given this mechanism, does NGINX’s hot reloading meet our needs well? The answer is probably no. Let’s look at its problems.

Flaws of NGINX hot reloading

First, frequent hot reloading makes connections unstable and increases the risk of lost business.

When NGINX executes the reload command, the old worker processes keep handling their existing connections, and actively close each connection once the current request on it has been processed. If the client does not handle this well, business may be lost, so the reload is clearly not transparent to the client.

Second, in some scenarios the old processes take a long time to be reclaimed, which affects normal business.

For example, when proxying the WebSocket protocol, NGINX does not parse the communication frames, so it cannot know whether a request has finished. Even if a worker process receives the exit command from the master, it cannot exit immediately. Instead, it has to wait until these connections fail, time out, or are closed by one end before it can exit normally.

Another example is NGINX acting as a reverse proxy at the TCP or UDP layer, where it has no way of knowing how many exchanges a session will involve before it is truly finished.

This makes the old worker processes take a particularly long time to be reclaimed, especially in industries such as live streaming, news media, and speech recognition, where it can take half an hour or even longer. If reloads are frequent during this time, the number of processes in the shutting-down state keeps growing, and NGINX may eventually run out of memory (OOM), seriously affecting the business.

# Old worker processes that never go away:

nobody 6246 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 6247 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 6248 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 6249 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 7995 10419 0 10:30 ? 00:20:37 nginx: worker process is shutting down <= here

nobody 7995 10419 0 10:30 ? 00:20:37 nginx: worker process is shutting down

nobody 7996 10419 0 10:30 ? 00:20:37 nginx: worker process is shutting down

From the above, we can see that although the “hot reloading” provided by nginx -s reload was sufficient for earlier technical scenarios, it is stretched thin and outdated in today’s fast-moving world of microservices and cloud native. If your business changes weekly or daily, NGINX reload still meets your needs. But what if changes happen every hour, or every minute? Suppose you have 100 NGINX services: reloading once an hour means 2,400 reloads a day; reloading once a minute means 144,000 reloads a day, and at once per second it would be 8.64 million. This is clearly unacceptable.

Therefore, we need a reload solution that does not replace processes, and that can update content directly inside the existing NGINX processes and take effect in real time.
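The reload counts are easy to verify. The sketch below assumes a fleet of 100 instances reloading at a fixed interval, and adds a rough estimate (an assumption of this sketch, not a measurement) of how many old worker generations can be draining at once on a single instance when each generation needs the half hour mentioned earlier to exit. The function names are made up for illustration.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def reloads_per_day(instances: int, interval_seconds: int) -> int:
    """Total reloads per day across a fleet reloading at a fixed interval."""
    return instances * SECONDS_PER_DAY // interval_seconds


def lingering_generations(drain_seconds: int, interval_seconds: int) -> int:
    """Rough upper bound on old worker generations still draining on one
    instance, assuming every generation needs the full drain time to exit."""
    return math.ceil(drain_seconds / interval_seconds)


hourly = reloads_per_day(100, 3600)        # one reload per hour
every_minute = reloads_per_day(100, 60)    # one reload per minute
every_second = reloads_per_day(100, 1)     # one reload per second
print(hourly, every_minute, every_second)

# A 30-minute drain time combined with one reload per minute.
print(lingering_generations(30 * 60, 60))
```

For 100 instances this gives 2,400 reloads a day at an hourly interval, 144,000 at one per minute, and 8.64 million at one per second. With a 30-minute drain time and a reload every minute, up to 30 generations of old workers could be shutting down at the same time on each instance.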

A hot reloading scheme that takes effect directly in memory

From its very beginning, Apache APISIX set out to solve the problem of NGINX hot reloading.

Apache APISIX is built on an NGINX + Lua technology stack. It is a cloud-native, high-performance, fully dynamic microservice API gateway that uses etcd as its configuration center. It provides hundreds of features, including load balancing, dynamic upstreams, canary release, fine-grained routing, rate limiting, service degradation, circuit breaking, authentication, and observability.

With APISIX, you can update the configuration without restarting the service, which means no restart is needed when you modify upstreams, routes, or plugins. Since APISIX is based on NGINX, how does it get past NGINX’s limitation and achieve seamless hot updates? Let’s look at the architecture of APISIX first.

From the architecture diagram, we can see that APISIX gets past NGINX’s limitation because it moves all upstream configuration into the APISIX Core and Plugin Runtime, where upstreams are selected dynamically.

Take routing as an example. In NGINX, routes are defined in the configuration file, and every change requires a reload to take effect. To make routing dynamic, Apache APISIX configures a single server in the NGINX configuration file, and that server has only one location. This location is the main entry point: every request passes through it, and APISIX Core dynamically selects the specific upstream. As a result, the routing module of Apache APISIX supports adding, modifying, and deleting routes at runtime, which is how dynamic loading is achieved. None of these changes is perceptible to the client or affects it.
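The idea of a single entry point backed by an in-memory route table can be sketched as follows. This is an illustration only: APISIX itself is implemented in Lua, and the Router class, the single_entry function, and the example hosts and addresses are all hypothetical names invented for this sketch.

```python
from typing import Dict, List, Optional


class Router:
    """In-memory route table that can change while requests are being served."""

    def __init__(self) -> None:
        self._routes: Dict[str, List[str]] = {}  # host -> upstream nodes

    def put_route(self, host: str, nodes: List[str]) -> None:
        self._routes[host] = list(nodes)  # add a route or replace an existing one

    def delete_route(self, host: str) -> None:
        self._routes.pop(host, None)

    def match(self, host: str) -> Optional[List[str]]:
        return self._routes.get(host)


router = Router()


def single_entry(host: str) -> str:
    """Stand-in for the one catch-all location: every request enters here."""
    nodes = router.match(host)
    if nodes is None:
        return "404 no route"
    return f"proxy to {nodes[0]}"


print(single_entry("shop.example.com"))                   # no route yet
router.put_route("shop.example.com", ["10.0.0.5:8080"])   # add at runtime
print(single_entry("shop.example.com"))
router.put_route("shop.example.com", ["10.0.0.9:8080"])   # change the upstream
print(single_entry("shop.example.com"))
router.delete_route("shop.example.com")                   # remove at runtime
print(single_entry("shop.example.com"))
```

Because the entry point never changes and only the table behind it does, no process has to be replaced when a route is added, modified, or removed.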

Here are a few typical scenarios.

For example, to add a reverse proxy for a new domain name, you only need to create an upstream in APISIX and add a new route. The whole process requires no restart of the NGINX processes. Another example is the plugin system: APISIX implements IP blacklists and whitelists through the ip-restriction plugin. Updates to these capabilities are dynamic as well, with no need to restart the service. With etcd in the architecture, configuration changes are pushed incrementally in real time, so all rules take effect dynamically and immediately, giving users the best possible experience.
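As a hedged sketch of what such a change could look like, the snippet below builds (but does not send) a request to the APISIX Admin API that creates a route for a new domain with an upstream and an ip-restriction whitelist. The Admin API address, the API key placeholder, the route ID, the host name, and the node address are all assumptions chosen for illustration; check them against your own deployment and APISIX version.

```python
import json
import urllib.request

# Assumptions for illustration only: Admin API address and key, route ID,
# host name, client network, and upstream node.
ADMIN_API = "http://127.0.0.1:9180/apisix/admin"
API_KEY = "replace-with-your-admin-key"

route = {
    "uri": "/*",
    "host": "new.example.com",
    "plugins": {
        # Only clients from this network may access the route.
        "ip-restriction": {"whitelist": ["10.0.0.0/8"]},
    },
    "upstream": {
        "type": "roundrobin",
        "nodes": {"10.0.0.5:8080": 1},  # "host:port": weight
    },
}

request = urllib.request.Request(
    url=f"{ADMIN_API}/routes/1",
    data=json.dumps(route).encode("utf-8"),
    headers={"X-API-KEY": API_KEY, "Content-Type": "application/json"},
    method="PUT",
)

print(request.get_method(), request.full_url)
print(json.dumps(route, indent=2))
# To apply it against a running gateway: urllib.request.urlopen(request)
```

Sending the same request again with a different whitelist or node list would update the route in place, without reloading or restarting NGINX.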

Summary

In some scenarios, NGINX hot reloading leaves two sets of processes, old and new, running side by side for a long time, which consumes extra resources. Frequent hot reloading also carries a small probability of lost business. With the current trend toward cloud native and microservices, services change more often and the strategies for controlling APIs have changed too, which puts new requirements on hot reloading that NGINX can no longer meet.

Now is the time to switch to Apache APISIX, an API gateway better suited to the cloud-native era, with a more complete hot reloading strategy and excellent performance, and to enjoy the large gains in management efficiency that dynamic, unified management brings.
