With the development of 5G and AI technology, the video industry is ushering in a period of high-speed growth. Video accounted for 43% of network bandwidth in 2019, and is expected to reach 76% by 2025, still maintaining high-speed growth. Among them Most of them are ultra-high-definition videos. According to the prediction of an authoritative organization, the scale of ultra-high-definition video is expected to reach 4 trillion yuan in 2022, which is more than three times the 1.2 trillion yuan in 2019.It can be seen that there is a lot of room for the scale of the UHD market.

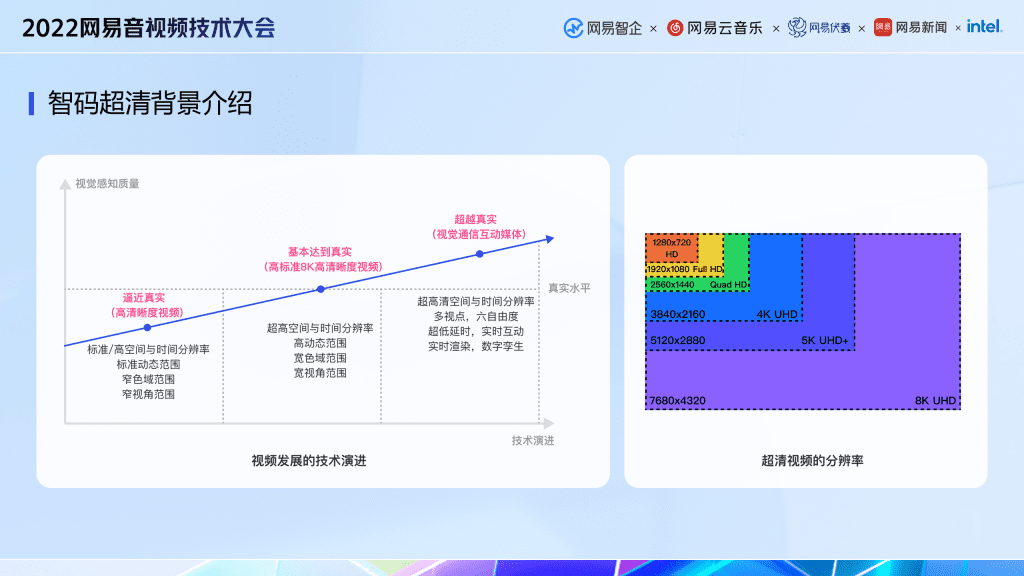

We are currently at the stage of approaching reality and basically reaching the stage of reality. In terms of resolution, we are slowly developing from standard definition and high definition to ultra high definition. From the perspective of dynamic range, color gamut and viewing angle range, from standard dynamic range to high dynamic range, and narrow color gamut, narrow viewing angle to wide The development of color gamut and wide viewing angle,In the future, it will definitely develop towards the direction of interactive media beyond real visual communication, in which more video technologies will emerge,Including higher resolution, as well as multi-view, multi-degree-of-freedom, and technologies such as ultra-low latency, real-time interaction, real-time rendering, and digital twins.

The high-definition video we all understand refers to videos with 720P and 1080P resolutions, and ultra-clear refers to higher resolutions, such as 2K, 4K, 5K, and 8K. As the resolution size becomes larger and larger, network bandwidth transmission The cost of video is getting higher and higher, so a set of algorithms for compressing video with low cost and high quality is needed. Based on the above background, Netease Yunxin has developed a self-developed algorithm of Zhima ultra-clear, which can not only bring the ultimate compression to the video, but also enhance the image quality.

In terms of horizontal comparison, there are many manufacturers that provide smart code ultra-clear services. On the left side of the picture below are domestic manufacturers, including the familiar Alibaba Cloud, Tencent Cloud, Baidu Cloud, etc. On the right side of the picture below are foreign manufacturers, including Amazon, YouTube, Netflix, etc. It can be seen that the smart code ultra-clear service is very important and a very basic tool.

The figure below shows the position and role of video transcoding in the entire live broadcast and on-demand data stream. This figure shows the process of streaming media push and pull data streams in the live and on-demand fields. It can be seen that from the initial video source to the The transcoding server transcodes, and after transcoding, it is packaged and encrypted and sent to different servers. Finally, if the client has a request, we will pull the stream from the nearest server to the client to play and display. Our transcoding is at a relatively advanced position. If the code stream after transcoding is smaller, a series of costs for subsequent network transmission will be greatly reduced, and the quality of the code stream directly affects the subjective experience when playing on the client. Therefore, the transcoding here must be ultra-clear.

The key technical points of Zhima ultra-clear transcoding are divided into three parts. The first block is video pre-processing, the second block is video encoding, and the third block is video post-processing.

Video pre-processing

Video pre-processing includes content analysis and image quality improvement.

The content analysis includes two aspects:

The first is scene recognition:Through scene recognition, different scenes are distinguished, including games, cartoons, action movies, video conferences, etc. For different scenarios, we will select different pre-processing strategies and encoding tools more carefully, so that pre-processing and encoding can be adaptive to the scene.

The second is ROI detection:This refers to the ROI area detection based on deep learning. We pass the detected ROI area to the pre-processing module and the encoding module. In the pre-processing and encoding modules, the image quality of the ROI area is enhanced and repaired.

Image quality improvement includes three parts: video enhancement, color enhancement, and video noise reduction.

Video enhancement and color enhancement are based on the method of deep learning to enhance the original video, which will significantly improve the image quality subjectively.

Video noise reduction is to perform noise reduction processing on noisy videos after noise evaluation, which can not only improve the image quality, but also greatly help the compression rate of video coding, so video noise reduction is very useful. processing tools.

video encoding

Smart Coding:Including perceptual coding, ROI coding, and precise frame-level and row-level code control.

Encoding core aspects:There are self-developed NE264, NE265, and NEVC that supports private protocols.

video post-processing

Mainly to improve the image quality, including video super resolution and video enhancement.

Super-resolution technology of technical analysis of smart code ultra-clear

Super resolution here specifically refers to super resolution, from low resolution to high resolution. The super-resolution algorithm is generally deployed on the terminal side, so it needs to be fast and efficient. Therefore, we have developed a set of real-time super-resolution algorithms based on lightweight networks.

The self-developed lightweight network here is called Yunxin RFDECB network structure. The following figure describes NetEase Yunxin’s RFDECB network structure in detail. The convolution module is composed of different levels of residual features and ECB output re-parameterized structure to better extract image features, and finally obtain high-resolution images through fusion. For the specific structure of the ECB module on the right, we use the Laplacian operator and the Sobel operator to extract the edge features of the image, so as to obtain a better super-resolution effect.

In addition, after the training is over, we will expand and merge the multi-branch network structure in the ECB module, and finally turn it into a very simple convolution, which will greatly improve the efficiency in the reasoning process and engineering implementation.

Our self-developed super-scoring algorithm participated in this year’s CVPR2022 super-scoring competition. In the comprehensive performance track, we surpassed players from Byte, Ali, Bilibili, Huawei, Nanjing University, Tsinghua University, etc., and won the comprehensive performance The champion of the track, do the best level in the industry.

The left side of the figure below shows the effect of our super-resolution technology, the left side shows no super-resolution, and the right side shows our super-resolution algorithm. It can be seen that the globe and text on the left are relatively blurred. After the super-resolution algorithm, the globe and text will be much clearer. This is the image quality enhancement brought by our super-resolution algorithm.

The right side of the figure below is the processing comparison between our self-developed mobile terminal super-resolution and the industry’s super-resolution solutions, both of which perform twice the super-resolution under the same 480P resolution. It can be seen that compared with the 10 milliseconds of the industry’s solution 1, we can reduce it by another 50% to reach a speed of 5 milliseconds. Therefore, we can deploy it on more low-performance mobile terminals, and bring more customers an experience of improved image quality.

Let’s talk about the encoding technology. The first is the human eye perception encoding technology. The left side of the figure below is the basic principle of JND. The smallest detectable error of JND (Just Noticeable Distortion) is to further compress the video by using the visual redundancy of the human eye. It can be seen from this figure that the distortion bit rate RDO curve used inside the encoding is a continuous convex curve, and what our human eyes perceive is not continuous, but stepped. It can be seen from the comparison that if the original RDO curve is replaced with a stepped curve, less code rate can be used under the same distortion.

The traditional JND algorithm is based on the underlying features of the image, including the texture, edge, brightness, and color of the image. Netease Yunxin’s self-developed JND perception coding, in addition to adding high-level feature analysis based on deep learning to the traditional JND algorithm, can identify text, faces, and foregrounds in images, and has other salient features regions, and do different JND formulations for these different features. We apply JND formulas with different characteristics to encoding, which can greatly reduce our bit rate. The implementation of this set of algorithms can bring an average bit rate savings of more than 15%, and in some special scenarios can bring more bit rate savings.

The second is the joint optimization of preprocessing and encoding. Here we mainly talk about ROI encoding. We detect the ROI area based on the pre-processing of deep learning. The ROI area in the figure is the face and text. The module protects the subjective quality of the ROI, not only simply reducing the QP of the ROI area, but also for the text part, we use encoding tools such as transform skip to improve the subjective effect of the text. For the non-ROI area, we reduce the bit rate, which can save a lot of bit rate overall.

The figure below is the kernel optimization of NE-CODEC. We have developed more than 20 innovative coding algorithms, which are distributed in different modules of different coding cores, including GOP-level pre-analysis, frame-level pre-analysis, and prediction, conversion, and quantization. In the GOP-level pre-analysis, we self-developed a set of self-adaptive hierarchical B reference structures, including GOP8, GOP16, and GOP32. An adaptive hierarchical structure is also made for CU TREE.

The pre-analysis at the frame level is mainly the optimization of JND and ROI, which has been mentioned above.

We also have many fast algorithms in the prediction module, including fast selection of multiple reference frames. Also in the conversion module, we have done a deep acceleration of the current DCT module, and we have proposed a fast RDOQ algorithm. In addition, combined with JND, the algorithm of JND in the frequency domain is made. In the quantization part, we propose an SSIM-RDO algorithm, which can guarantee more code rate savings under the same SSIM objective index. Through continuous iterative optimization of these many fast algorithms, a set of relatively stable NE-CODEC core is finally formed.

The right side of the picture above is the comparison test between NE265 and other CODECs. It can be seen that in the Online mode, that is, in the 30fps gear, NE265 is superior to major manufacturers in the industry in terms of VMAF indicators. It can also be seen from this figure that compared with the open source X265, NE265 can save 45% bit rate under the same condition of VMAF.

Under Yunxin NE265 encoding, the video not only has the improvement of subjective experience, but also our VMAF objective index has been raised from 89 to 97 points at the same bit rate.Therefore, both objective indicators and subjective experience have been greatly improved.

The next content to share is the business value of Zhima Ultra Clear. Netease Yunxin’s smart code super clear has been fully launched in the company’s internal Netease media, Netease cloud music live broadcast, Youdao video, and Netease vitality. Taking Netease media as an example, the left side of the picture below is the application of Netease media, which can run 40 times a day. 10,000 minutes of video transcoding, after going online, the bandwidth dropped from 80G per day to 32G per day, saving more than 60% of bandwidth. On the right is NetEase Yunxin’s own transcoding server, which also has more than 100,000 minutes of transcoding time per day.

lower bandwidth

The image below is from Nvidia’s AI video compression. On the left, you can see that the conventional H264 is used for compression, and the size of each frame is about 97KB, which means that we need 97KB of bandwidth for network transmission per frame. On the right side, the size of each frame can be reduced to 0.1KB after Nvidia’s AI video compression, which is quite amazing. The principle is to only transmit the key points of the face on our left image. It is a good idea to reconstruct the key points of the face at the receiving end, which can greatly save bandwidth during the transmission process.I believe that the vivid combination of AI and video coding in the future is also an important direction in the future.

#Netease #Yunxin #Zhicode #ultraclear #transcoding #technology #practice #stable #personal #space #Netease #Yunxin #News Fast Delivery