CodeGeeX is a multilingual code generation pretrained model with 13 billion parameters. It is implemented with Huawei's MindSpore framework and was trained on 192 nodes of Pengcheng Lab's "Pengcheng Cloud Brain II" cluster (1,536 domestic Ascend 910 AI processors in total).

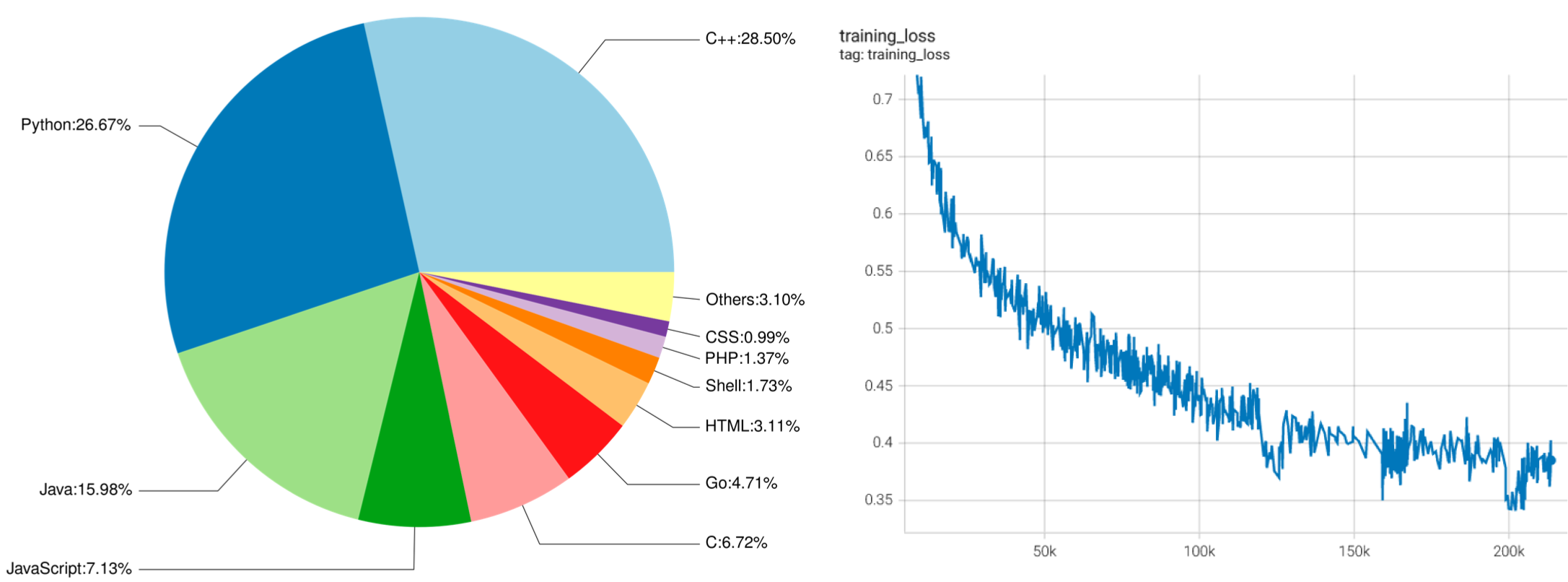

As of June 22, 2022, CodeGeeX had been pre-trained on more than 850 billion tokens of code from more than 20 programming languages.

CodeGeeX has the following features:

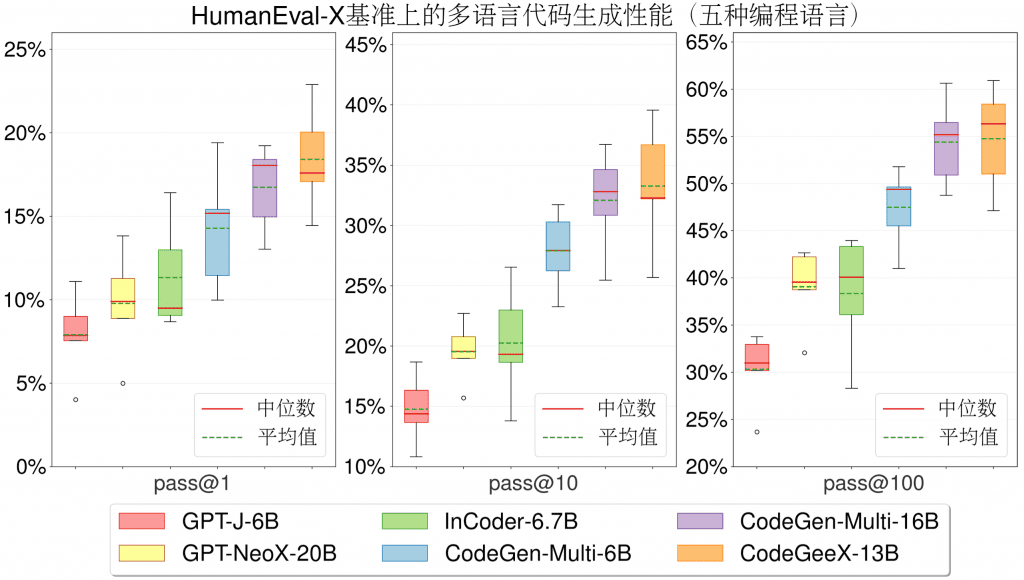

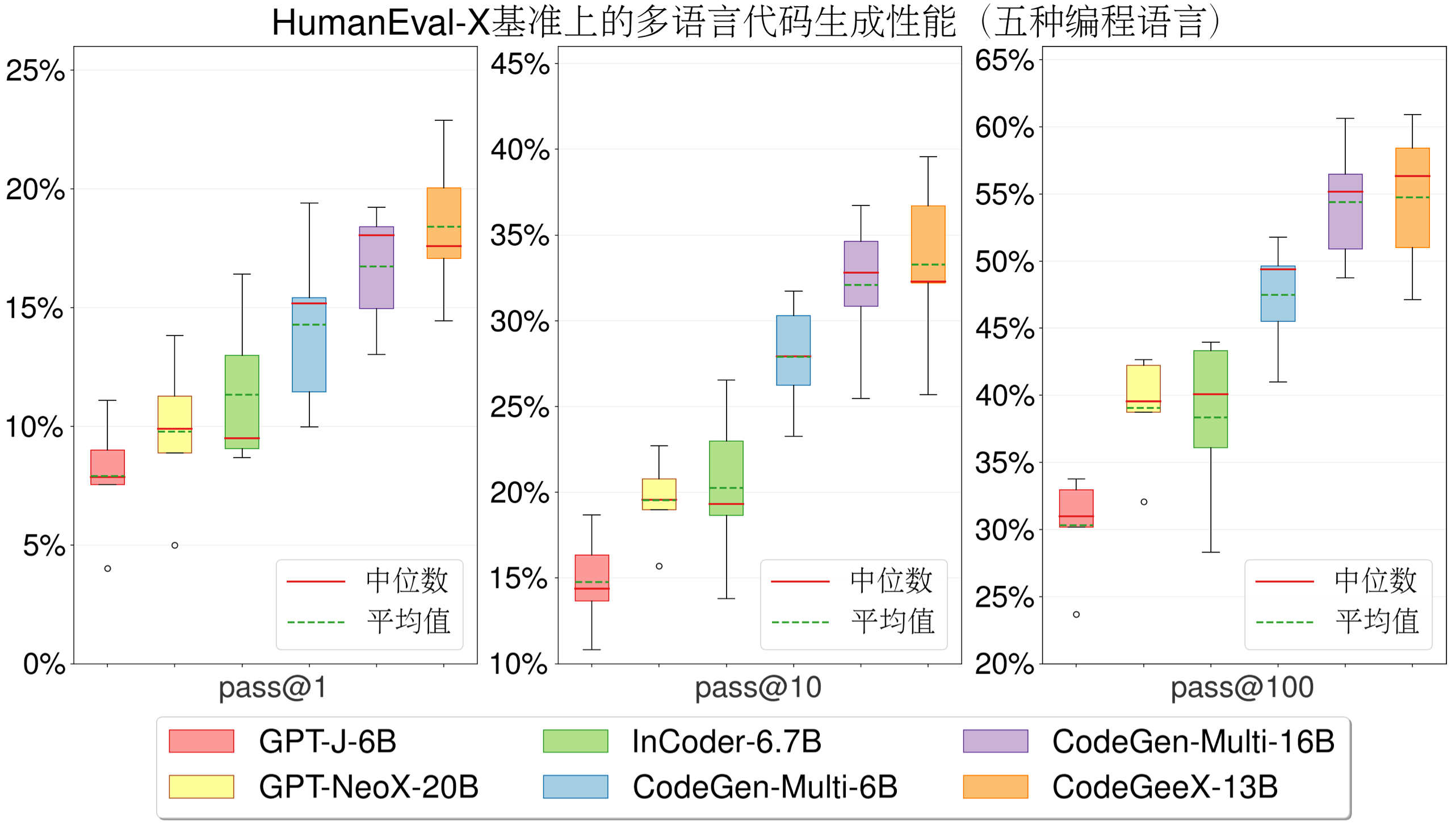

- High-precision code generation: Supports generating code in mainstream programming languages such as Python, C++, Java, JavaScript, and Go. It achieves a solve rate of 47% to 60% on the HumanEval-X code generation task, with better average performance than other open-source baseline models. See: Code generation example.

- Cross-language code translation: Supports automatic translation of code fragments between programming languages. The translations are highly accurate, surpassing other baseline models on the HumanEval-X code translation task. See: Code translation example.

- Automatic programming plugin: The CodeGeeX plug-in is now available in the VS Code extension marketplace (completely free). Through its strong few-shot generation ability, users can customize the style and capabilities of code generation to better assist their coding. See: Plugin download.

- Cross-platform open-source model: All code and model weights are open source and available for research purposes. CodeGeeX supports both Ascend and NVIDIA platforms and can run inference on a single Ascend 910 or NVIDIA V100/A100. See: Apply for model weights.

New multilingual benchmark HumanEval-X: HumanEval-X is the first multilingual, multi-task benchmark that supports functional correctness evaluation. It contains 820 hand-written, high-quality code generation problems with test cases and reference solutions, covers 5 programming languages (Python, C++, Java, JavaScript, Go), and supports evaluating both code generation and code translation. See: How to use.
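To make "functional correctness evaluation" concrete, here is a minimal sketch; it is not the HumanEval-X harness itself. It assumes a Python-only setting in which a generated solution counts as correct when the problem's test cases run against it without raising an exception. The helper names `passes_tests` and `pass_at_k` are hypothetical, and using the pass@k estimator (common for HumanEval-style benchmarks) is an assumption about how a solve rate might be computed.

```python
from math import comb  # available in Python 3.8+


def passes_tests(candidate_source, test_source):
    """Return True if the tests run against the candidate without any exception."""
    namespace = {}
    try:
        exec(candidate_source, namespace)  # define the generated function
        exec(test_source, namespace)       # run the assertions against it
    except Exception:
        return False
    return True


def pass_at_k(n, c, k):
    """Estimate the chance that at least one of k samples passes,
    given that c of the n generated samples passed."""
    if n - c < k:
        # Fewer than k failing samples exist, so any k samples include a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical problem: one correct and one incorrect candidate solution.
good = "def add(a, b):\n    return a + b\n"
bad = "def add(a, b):\n    return a - b\n"
tests = "assert add(1, 2) == 3\nassert add(-1, 1) == 0\n"

results = [passes_tests(src, tests) for src in (good, bad)]
print(results, pass_at_k(n=len(results), c=sum(results), k=1))
```

Because `exec` runs untrusted generated code directly, a real harness would isolate each run (for example in a sandboxed subprocess with a timeout), and the other four languages would additionally need a compile-and-run step.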

On the HumanEval-X code generation task, CodeGeeX achieves the best average performance compared to other open source baseline models.

User guide

CodeGeeX was initially implemented with the MindSpore framework and trained on Ascend 910 AI processors. To support more platforms, the team has ported it to the Megatron-LM framework, which supports a PyTorch + GPU environment.

Install

Requires Python 3.7+, CUDA 11+, PyTorch 1.10+, and DeepSpeed 0.6+. Install codegeex with the following commands:

    git clone git@github.com:THUDM/CodeGeeX.git
    cd CodeGeeX
    pip install -e .
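Before installing, it can help to confirm that the environment meets the version requirements listed above. The following is a minimal sketch under stated assumptions: `parse_version` and `check_environment` are hypothetical helpers, the PyPI package names are assumed to be `torch` and `deepspeed`, and the CUDA version is not checked. It relies on `importlib.metadata`, which is in the standard library from Python 3.8 onward.

```python
import sys
from importlib import metadata  # standard library in Python 3.8+

# Minimum (major, minor) versions taken from the requirements line.
MINIMUMS = {"torch": (1, 10), "deepspeed": (0, 6)}


def parse_version(text):
    """Reduce a version string such as '1.13.1+cu117' to a (major, minor) tuple."""
    parts = []
    for piece in text.split(".")[:2]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits or 0))
    while len(parts) < 2:
        parts.append(0)
    return tuple(parts)


def check_environment():
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    if sys.version_info < (3, 7):
        problems.append("Python 3.7+ is required")
    for package, minimum in MINIMUMS.items():
        try:
            installed = parse_version(metadata.version(package))
        except metadata.PackageNotFoundError:
            problems.append(f"{package} is not installed")
            continue
        if installed < minimum:
            problems.append(f"{package} {installed} is older than {minimum}")
    return problems


for problem in check_environment():
    print(problem)
```

Comparing tuples rather than raw strings matters here: as strings, "1.9" sorts after "1.10", whereas `(1, 9) < (1, 10)` gives the intended ordering.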

Model weights

Apply for the weights through the link, and you will receive an email containing a file, urls.txt, with temporary download links. We recommend using aria2 to download them quickly with the following command (please make sure you have enough disk space to store the weights, about 26 GB):

aria2c -x 16 -s 16 -j 4 --continue=true -i urls.txt
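The roughly 26 GB figure is consistent with 13 billion parameters stored at 2 bytes each. The sketch below reproduces that arithmetic and checks free disk space before downloading. The 2-byte (half-precision) storage format is an assumption, not something the text states, and `estimated_weight_bytes` and `has_room_for_weights` are hypothetical helper names.

```python
import shutil

PARAMETERS = 13_000_000_000  # 13 billion, from the model description
BYTES_PER_PARAMETER = 2      # assumption: weights stored in half precision


def estimated_weight_bytes(parameters=PARAMETERS,
                           bytes_per_parameter=BYTES_PER_PARAMETER):
    """Estimate the size of the raw weights in bytes."""
    return parameters * bytes_per_parameter


def has_room_for_weights(directory=".", required_bytes=None, headroom=1.0):
    """Check whether `directory` has at least required_bytes * headroom free."""
    if required_bytes is None:
        required_bytes = estimated_weight_bytes()
    free = shutil.disk_usage(directory).free
    return free >= required_bytes * headroom


print(f"Estimated size: {estimated_weight_bytes() / 1e9:.0f} GB")
print("Enough free space:", has_room_for_weights("."))
```

Joining the downloaded parts and extracting the archive each write another copy of the data, so passing a `headroom` greater than 1 gives a more realistic check.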

Combine with the following command to get the full weights:

    cat codegeex_13b.tar.gz.part.* > codegeex_13b.tar.gz
    tar xvf codegeex_13b.tar.gz

Inference with GPUs

Try generating your first program with CodeGeeX! First, write the path where the weights are stored into the configuration file configs/codegeex_13b.sh. Second, write the prompt (which can be an arbitrary description or code snippet) to the file tests/test_prompt.txt. Then run the following script to start inference (the GPU ID must be specified):

bash ./scripts/test_inference.sh <GPU_ID> ./tests/test_prompt.txt
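The prompt step can also be scripted. This is a minimal sketch, assuming it runs from the repository root; `write_prompt` and `inference_command` are hypothetical helpers, and the example prompt is only an illustration. It builds the same command shown above but does not execute it.

```python
from pathlib import Path


def write_prompt(prompt, path="tests/test_prompt.txt"):
    """Write the prompt text to the file that the inference script reads."""
    target = Path(path)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(prompt, encoding="utf-8")
    return target


def inference_command(gpu_id, prompt_path="./tests/test_prompt.txt"):
    """Build the argument list for the inference script; it is not run here."""
    return ["bash", "./scripts/test_inference.sh", str(gpu_id), prompt_path]


# Hypothetical prompt: a comment describing the function to generate.
example_prompt = "# Write a function that returns the n-th Fibonacci number\n"
print(" ".join(inference_command(0)))
```

Calling `write_prompt(example_prompt)` and then passing the returned argument list to `subprocess.run` would reproduce the manual steps.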

VS Code plugin usage guide

A free VS Code plug-in based on CodeGeeX is available. Search for "codegeex" in the extension marketplace, or install it through the link. Detailed instructions are in the CodeGeeX plugin usage guide.

Left: The proportion of each programming language in the CodeGeeX training data. Right: The CodeGeeX training loss decreases as the number of training steps increases.
