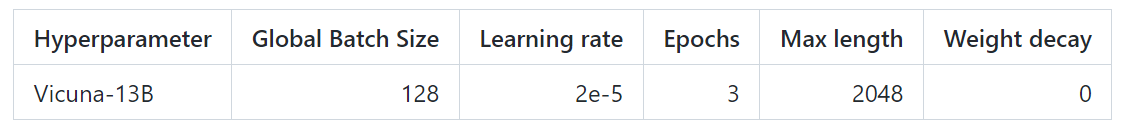

Vicuna is an open-source chatbot created by fine-tuning the LLaMA base model on approximately 70K user-shared conversations collected from ShareGPT.com via public APIs.


To ensure data quality, the development team converted the HTML back to markdown, filtered out inappropriate or low-quality samples, and split lengthy conversations into smaller segments that fit the model's maximum context length. The training recipe builds on Stanford Alpaca, with the following improvements:

- Multi-turn conversations: The training loss is adjusted to account for multi-turn conversations, and the fine-tuning loss is computed only on the chatbot's output.

- Lower costs with spot instances: The 40-times-larger dataset and 4-times-longer training sequences make training considerably more expensive. The Vicuna team uses SkyPilot managed spot jobs to cut costs, running on cheaper spot instances with auto-recovery from preemption and automatic region switching. This reduces the cost of training the 7B model from about $500 to about $140, and the 13B model from about $1,000 to about $300.
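The two data-handling ideas above, splitting long conversations to fit the context window and computing the loss only on the chatbot's turns, can be illustrated with a minimal sketch. This is not Vicuna's actual training code: it assumes conversations are already tokenized into `(role, token_ids)` pairs, and the function names `split_conversation` and `build_labels`, as well as the truncation policy for a single oversized turn, are hypothetical. Masked positions are marked with -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`, so those tokens contribute nothing to the loss.

```python
from typing import List, Tuple

# Label value that PyTorch's CrossEntropyLoss skips by default (ignore_index=-100).
IGNORE_INDEX = -100

# A turn is (role, token ids); role is "user" or "assistant" (assumed format).
Turn = Tuple[str, List[int]]


def build_labels(turns: List[Turn]) -> Tuple[List[int], List[int]]:
    """Concatenate turns into one sequence and mask every non-assistant token."""
    input_ids: List[int] = []
    labels: List[int] = []
    for role, ids in turns:
        input_ids.extend(ids)
        if role == "assistant":
            labels.extend(ids)  # chatbot output contributes to the loss
        else:
            labels.extend([IGNORE_INDEX] * len(ids))  # user prompt is masked out
    return input_ids, labels


def split_conversation(turns: List[Turn], max_len: int) -> List[List[Turn]]:
    """Greedily pack whole turns into chunks of at most max_len tokens."""
    chunks: List[List[Turn]] = []
    current: List[Turn] = []
    current_len = 0
    for role, ids in turns:
        if len(ids) > max_len:
            ids = ids[:max_len]  # hypothetical policy: truncate one oversized turn
        if current and current_len + len(ids) > max_len:
            chunks.append(current)  # start a new chunk when this turn won't fit
            current, current_len = [], 0
        current.append((role, ids))
        current_len += len(ids)
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    conv = [("user", [1, 2, 3]), ("assistant", [4, 5]),
            ("user", [6]), ("assistant", [7, 8, 9])]
    for chunk in split_conversation(conv, max_len=6):
        print(*build_labels(chunk))
    # [1, 2, 3, 4, 5, 6] [-100, -100, -100, 4, 5, -100]
    # [7, 8, 9] [7, 8, 9]
```

Packing whole turns usually keeps a user prompt next to its reply, but a real pipeline would also have to decide what to do with chunks that start mid-conversation or end on an unanswered prompt, as the first chunk in this example does.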
