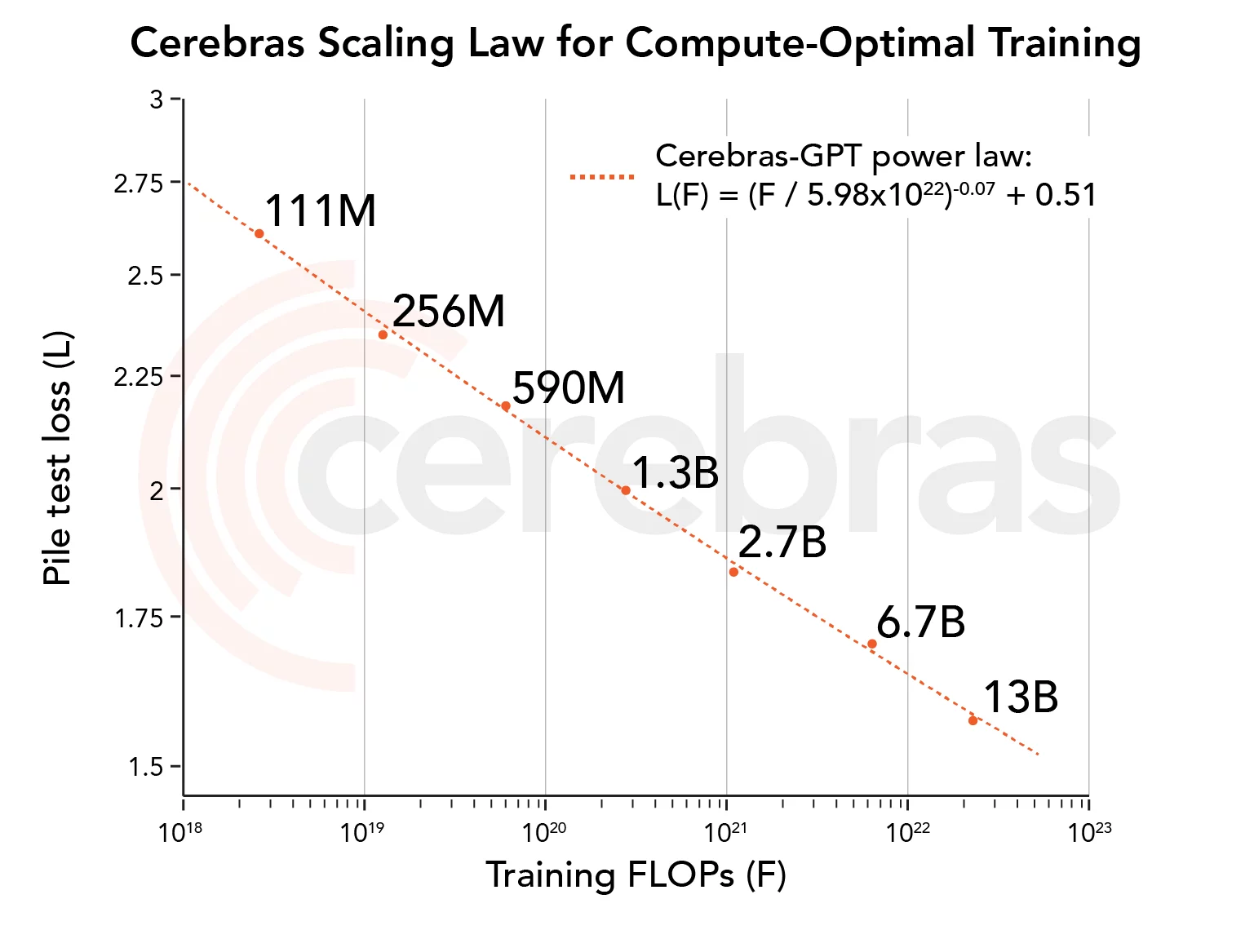

Cerebras-GPT is a family of pre-trained large language models for natural language processing, open-sourced by Cerebras. The family contains seven models, ranging in size from 111 million to 13 billion parameters.

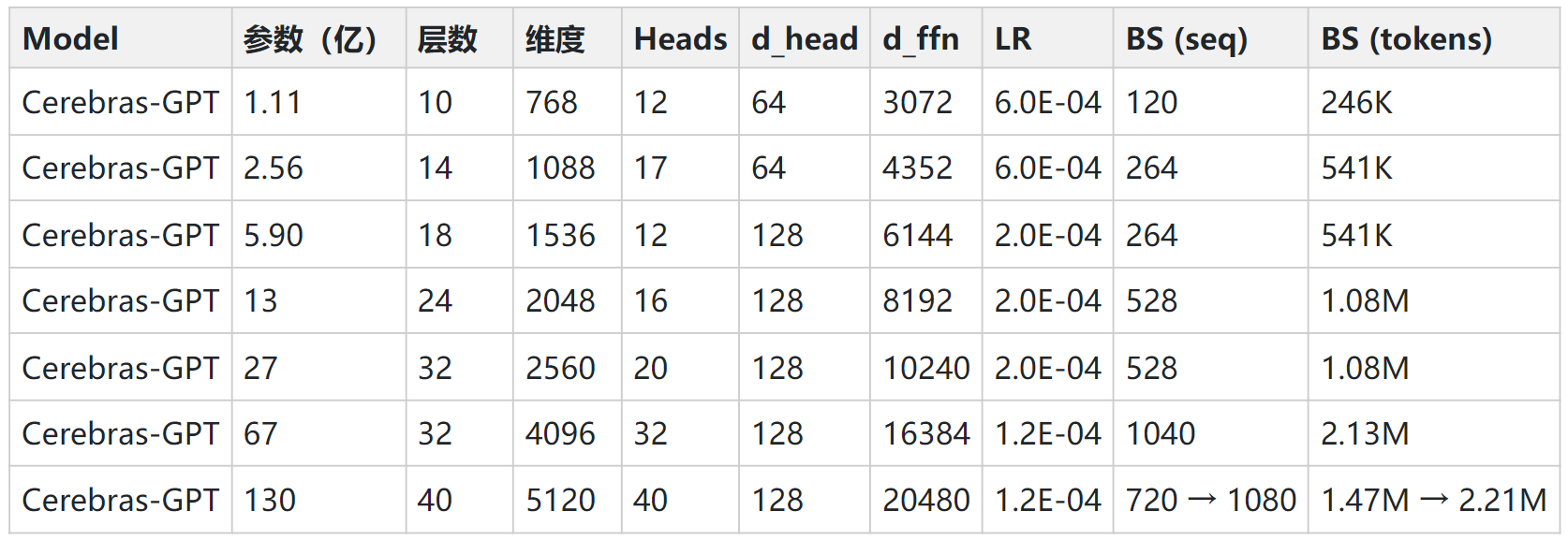

Compared with other models in the industry, Cerebras-GPT is open in almost every respect and carries virtually no usage restrictions: both the model architecture and the pre-trained weights are public. The model structures released so far, together with their training details, are as follows:
