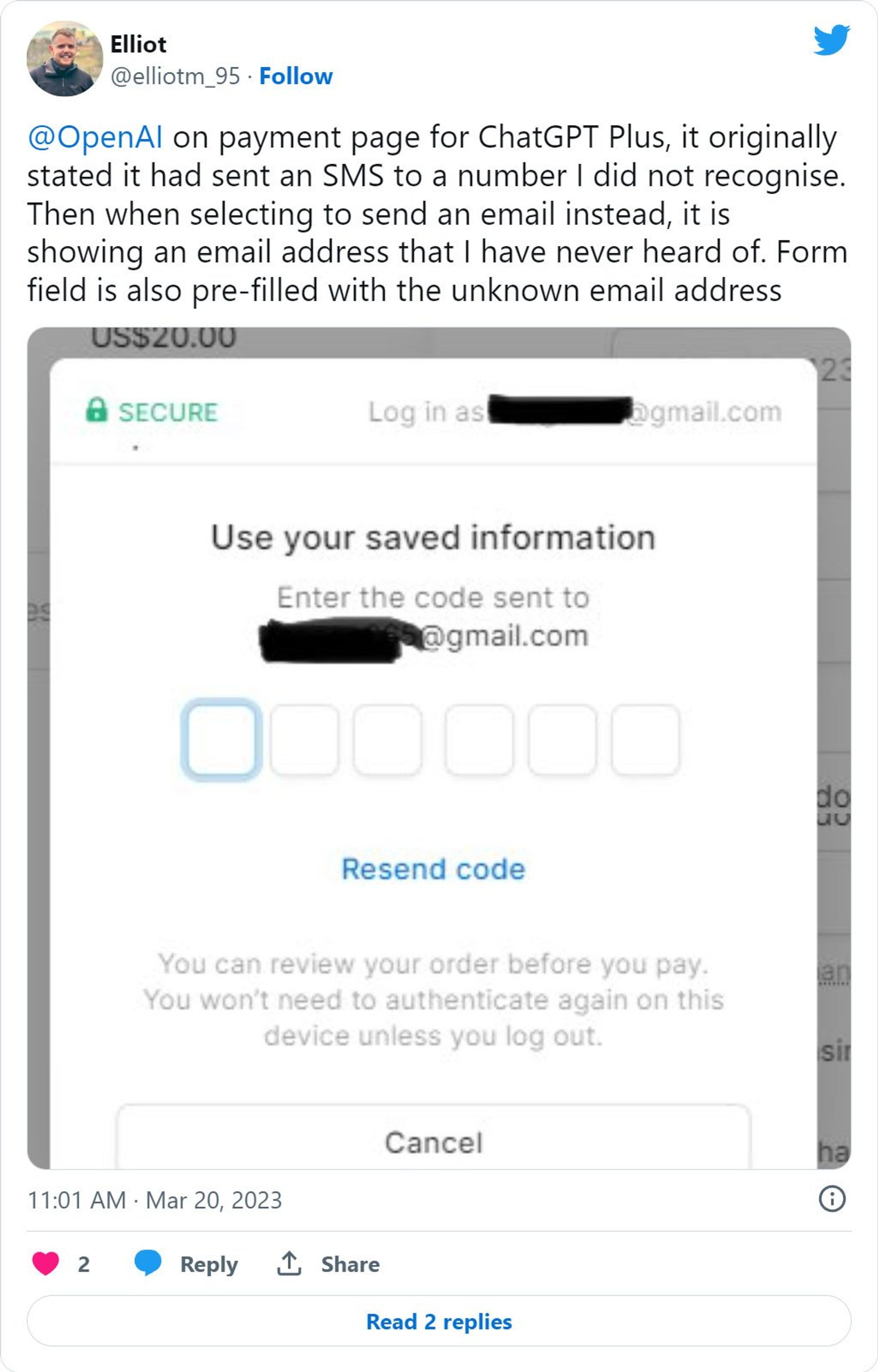

On Monday, ChatGPT suffered a user data leak: many users saw other people’s conversation records in their own chat history. The leak was not limited to conversation history. Many ChatGPT Plus users also posted screenshots on platforms such as Reddit and Twitter, saying they had seen other people’s email addresses on their subscription pages.

After the incident, OpenAI temporarily shut down the ChatGPT service to investigate. OpenAI CEO Sam Altman then tweeted personally, admitting that the company had encountered a major problem. He did not disclose details at the time, saying only that it was caused by a bug in an open source library:

We had a major issue in ChatGPT due to a bug in an open source library. A fix has now been released, and we have just finished verifying it.

A small percentage of users were able to see the titles of other users’ conversation histories.

After several days of investigation, OpenAI released an incident report with technical details. The incident was caused by a bug in an open source Redis client library, which led the ChatGPT service to expose other users’ chat history and the personal information of about 1.2% of ChatGPT Plus users.

Technical details

The bug was found in redis-py, the open source Redis client library. After discovering it, OpenAI immediately contacted the Redis maintainers and provided a patch to resolve the problem. The specific details of the bug are as follows:

- OpenAI uses Redis to cache user information in their servers, so ChatGPT doesn’t need to check the database for every request.

- OpenAI uses Redis Cluster to distribute this load across multiple Redis instances.

- OpenAI uses the redis-py library to allow a Python server using Asyncio to interface with Redis.

- The library maintains a shared pool of connections between the server and the cluster, and recycles connections when done to use for another request.

- When using Asyncio, redis-py’s requests and responses behave as two queues: the caller pushes the request onto the incoming queue, pops the response off the outgoing queue, and returns the connection to the pool.

- If a request is canceled after it has been pushed onto the incoming queue but before its response is popped from the outgoing queue, the bug appears: the connection becomes corrupted, and the next response dequeued for an unrelated request can receive data left behind on the connection.

- In most cases, this will result in an unrecoverable server error, and the user will have to retry their request.

- But in some cases, the corrupted data happens to match the type of data that the requester was expecting, so the data returned from the cache appears to be valid, even though the data belonged to another user.

- At 1 a.m. Pacific time on Monday, March 20, OpenAI inadvertently introduced a change to its servers that caused a spike in Redis request cancellations. This raised the probability of each connection returning incorrect data.

- This bug only appeared in the Asyncio redis-py client for Redis Cluster and has now been fixed.
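The failure mode described in the list above can be reproduced with a toy model. The sketch below is not redis-py code: `FakeConnection`, `fetch`, `fetch_safe`, and `demo` are hypothetical names invented for illustration, and the "server" is simply a queue of replies delivered in request order. It shows how returning a connection to the pool after a canceled request can hand one user's reply to the next caller. It also shows one possible mitigation, discarding the connection on any error, which is an assumption here and not necessarily the patch that was shipped.

```python
import asyncio
from collections import deque


class FakeConnection:
    """Hypothetical stand-in for one pooled connection; replies arrive in request order."""

    def __init__(self):
        self.pending = deque()  # replies still waiting on the wire

    async def send(self, key):
        # The toy server queues its reply as soon as the request is sent.
        self.pending.append(f"profile-of-{key}")

    async def read(self):
        await asyncio.sleep(0.01)  # network latency; a cancellation can land here
        return self.pending.popleft()


async def fetch(pool, key):
    """Buggy pattern: the connection is always recycled, even after a cancellation."""
    conn = pool.pop()
    try:
        await conn.send(key)
        return await conn.read()
    finally:
        pool.append(conn)  # an unread reply may still be sitting on this connection


async def fetch_safe(pool, key):
    """Assumed mitigation: replace the connection whenever the exchange is interrupted."""
    conn = pool.pop()
    try:
        await conn.send(key)
        result = await conn.read()
    except BaseException:  # includes asyncio.CancelledError
        pool.append(FakeConnection())  # drop the dirty connection, add a fresh one
        raise
    pool.append(conn)
    return result


async def demo(fetch_fn):
    pool = [FakeConnection()]
    task = asyncio.create_task(fetch_fn(pool, "alice"))
    await asyncio.sleep(0)  # let the task send its request and start reading
    task.cancel()           # cancel after the request, before the response
    try:
        await task
    except asyncio.CancelledError:
        pass
    return await fetch_fn(pool, "bob")  # an unrelated request reuses the pool


if __name__ == "__main__":
    print(asyncio.run(demo(fetch)))       # bob receives alice's data
    print(asyncio.run(demo(fetch_safe)))  # bob receives his own data
```

In this model the leaked reply happens to have the same shape as the one the second caller expects, which is the case the report describes as appearing valid even though the data belongs to another user.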

After an in-depth investigation, OpenAI found that some users may have seen the names, email addresses, billing addresses, last four digits of credit card numbers, and credit card expiration dates of other active users. OpenAI emphasized that full credit card numbers were not exposed.

The affected users account for 1.2% of all ChatGPT Plus users, and OpenAI is currently contacting all of them.
