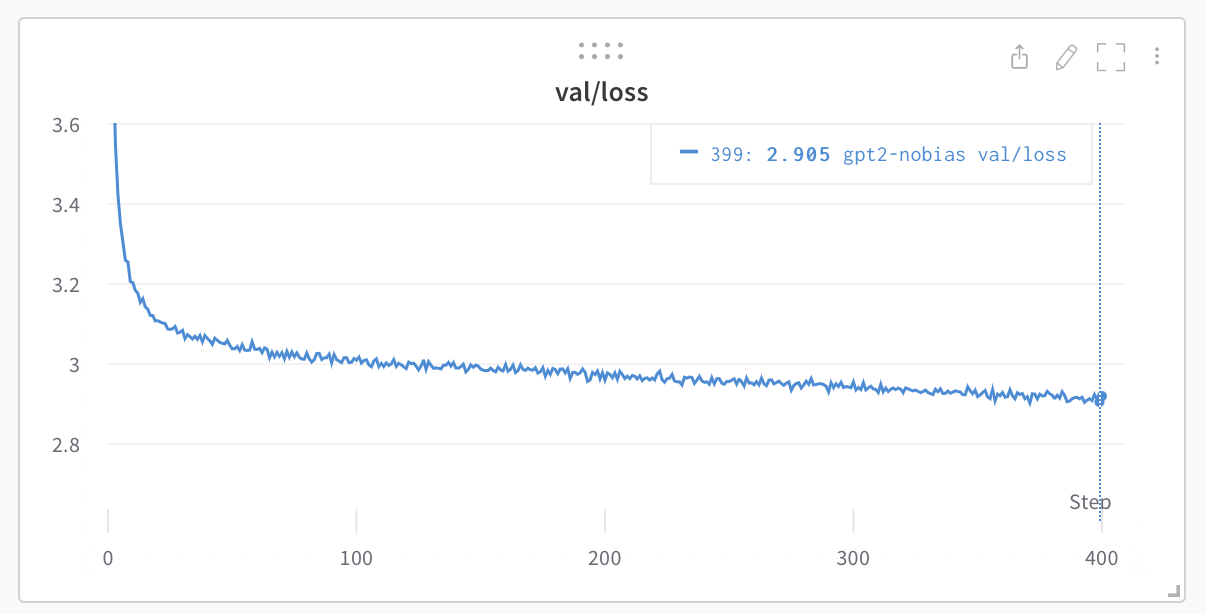

nanoGPT describes itself as the simplest, fastest repository for training and fine-tuning medium-sized GPTs. It is still under active development, but its `train.py` script already reproduces GPT-2 (124M) on OpenWebText, training for about 4 days on a single 8XA100 40GB node.
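To put the reported training cost in perspective, a quick back-of-the-envelope calculation converts "about 4 days on an 8XA100 node" into total GPU-hours. This is a rough sketch that assumes all eight GPUs stay busy for the full wall-clock period. The helper name `gpu_hours` is hypothetical and is not part of nanoGPT.

```python
def gpu_hours(num_gpus: int, days: float) -> float:
    """Total accelerator time, assuming every GPU runs for the whole wall-clock period."""
    hours_per_day = 24
    return num_gpus * days * hours_per_day


# One node with 8 A100 GPUs, running for about 4 days.
budget = gpu_hours(num_gpus=8, days=4)
print(f"~{budget:.0f} A100-hours")  # prints "~768 A100-hours"
```

The result is only an order-of-magnitude figure; actual cost depends on utilization, restarts, and evaluation overhead.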

Because the code is so simple, it’s easy to modify it to suit your needs, train a new model from scratch, or fine-tune a pretrained one.
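As a hedged illustration of how switching between training from scratch and fine-tuning might look, here is a config-file sketch modeled on the files in nanoGPT's `config/` directory, which `train.py` loads as plain Python to override its defaults. The variable names (`init_from`, `out_dir`, `dataset`, `learning_rate`, `decay_lr`, `max_iters`, `batch_size`, `gradient_accumulation_steps`, `always_save_checkpoint`) are assumed from the defaults at the top of `train.py`; the file name `finetune_example.py`, the output directory, and all numeric values are hypothetical and illustrative, not tuned settings, so check them against the repository before use.

```python
# Hypothetical config/finetune_example.py, modeled on nanoGPT's config/ files.
# train.py executes a file like this as plain Python; each global assigned here
# overrides the default of the same name in train.py (names assumed, verify in repo).

out_dir = 'out-finetune-example'   # where checkpoints are written (hypothetical name)
dataset = 'shakespeare'            # expects data/shakespeare/train.bin and val.bin

# 'scratch' trains a freshly initialized model; 'gpt2', 'gpt2-medium',
# 'gpt2-large' or 'gpt2-xl' start from OpenAI's pretrained GPT-2 weights.
init_from = 'gpt2'

# This sketch uses a small, constant learning rate and few iterations,
# as is common when fine-tuning a pretrained checkpoint.
learning_rate = 3e-5
decay_lr = False
max_iters = 20

# Effective batch per optimizer step = batch_size * gradient_accumulation_steps.
batch_size = 1
gradient_accumulation_steps = 32

always_save_checkpoint = False     # save only when validation loss improves
```

With such a file in place, running `python train.py config/finetune_example.py` would fine-tune under these settings, while changing `init_from` to `'scratch'` would train a new model from random initialization instead.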

Install

Dependencies:
