- Add time rewind (backtracking) feature

Time rewind works by enlarging the server-side cache of the video stream. You control how much is cached by setting buffertime under publish. For example, with a buffertime of 7 seconds the server keeps at least 7 seconds of audio and video data. To subscribe to data from 7 seconds ago, append ?mode=2 to the subscription address, and the subscription starts from that point.

Enabling this feature greatly increases memory consumption, so it should only be used in special cases.

- Subscription Mode Parameters

There are three subscription modes: 0 is real-time mode, which catches up automatically; 1 does not catch up, which prevents the video from jumping; 2 is time rewind mode, which starts the subscription as far back in the cached history as possible.

The subscription mode can be set under the subscribe configuration item, for example submode: 2. It can also be set for a single subscription by appending the parameter to the subscription address. If the URL parameter name conflicts with one you already use, for example your own mode parameter, you can change the parameter name through submodeargname in the configuration file.
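As a rough illustration of how a per-subscription mode with a configurable parameter name could be read from a subscription address, here is a minimal Go sketch. The helper subModeFromURL, the constant names, and the example addresses are hypothetical and are not taken from the Monibuca source; only the mode values 0, 1, 2 and the idea of a renamable parameter come from the text above.

```go
package main

import (
	"fmt"
	"net/url"
	"strconv"
)

// Subscription modes as described in the release notes.
// The constant names are illustrative, not Monibuca identifiers.
const (
	ModeRealtime = 0 // real-time, catches up automatically
	ModeNoJump   = 1 // no catch-up, avoids jumps in the video
	ModeRewind   = 2 // time rewind, starts from cached history
)

// subModeFromURL is a hypothetical helper. It reads the mode from the query
// parameter named argName and falls back to defaultMode when the parameter
// is missing, not a number, or outside the range 0..2.
func subModeFromURL(rawURL, argName string, defaultMode int) int {
	u, err := url.Parse(rawURL)
	if err != nil {
		return defaultMode
	}
	v := u.Query().Get(argName)
	if v == "" {
		return defaultMode
	}
	mode, err := strconv.Atoi(v)
	if err != nil || mode < ModeRealtime || mode > ModeRewind {
		return defaultMode
	}
	return mode
}

func main() {
	// Default parameter name.
	fmt.Println(subModeFromURL("rtmp://localhost/live/test?mode=2", "mode", ModeRealtime))
	// "mode" is already used for something else, so the name was changed to "submode".
	fmt.Println(subModeFromURL("rtmp://localhost/live/test?mode=hd&submode=1", "submode", ModeRealtime))
	// No parameter: the configured default applies.
	fmt.Println(subModeFromURL("rtmp://localhost/live/test", "mode", ModeRealtime))
}
```

Falling back to the configured default on any invalid value keeps a malformed address from breaking the subscription; a real server might prefer to reject it instead.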

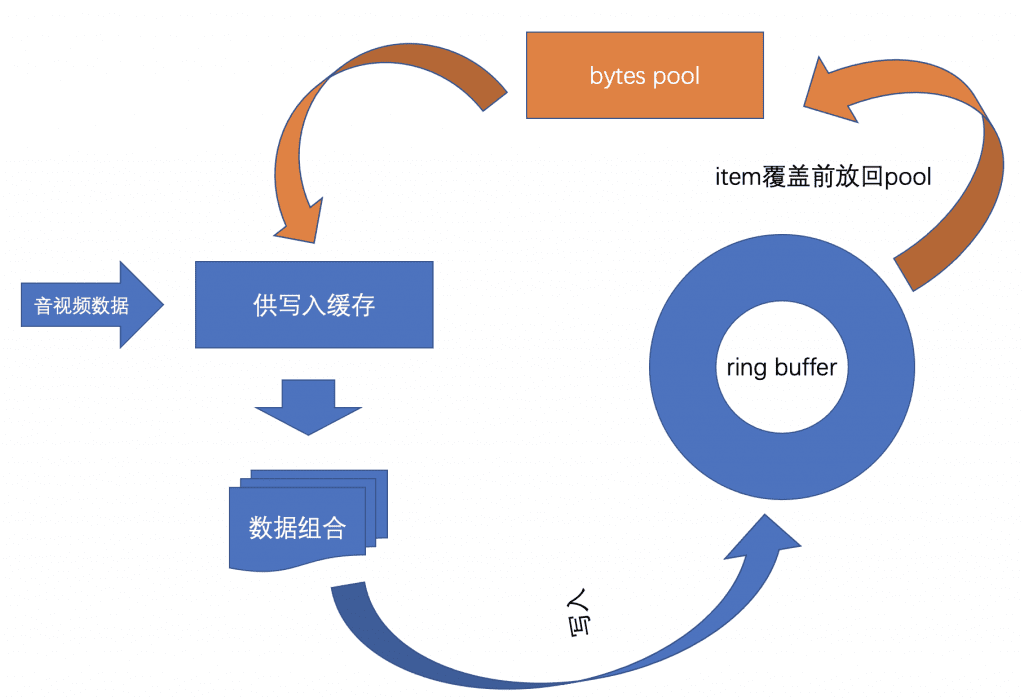

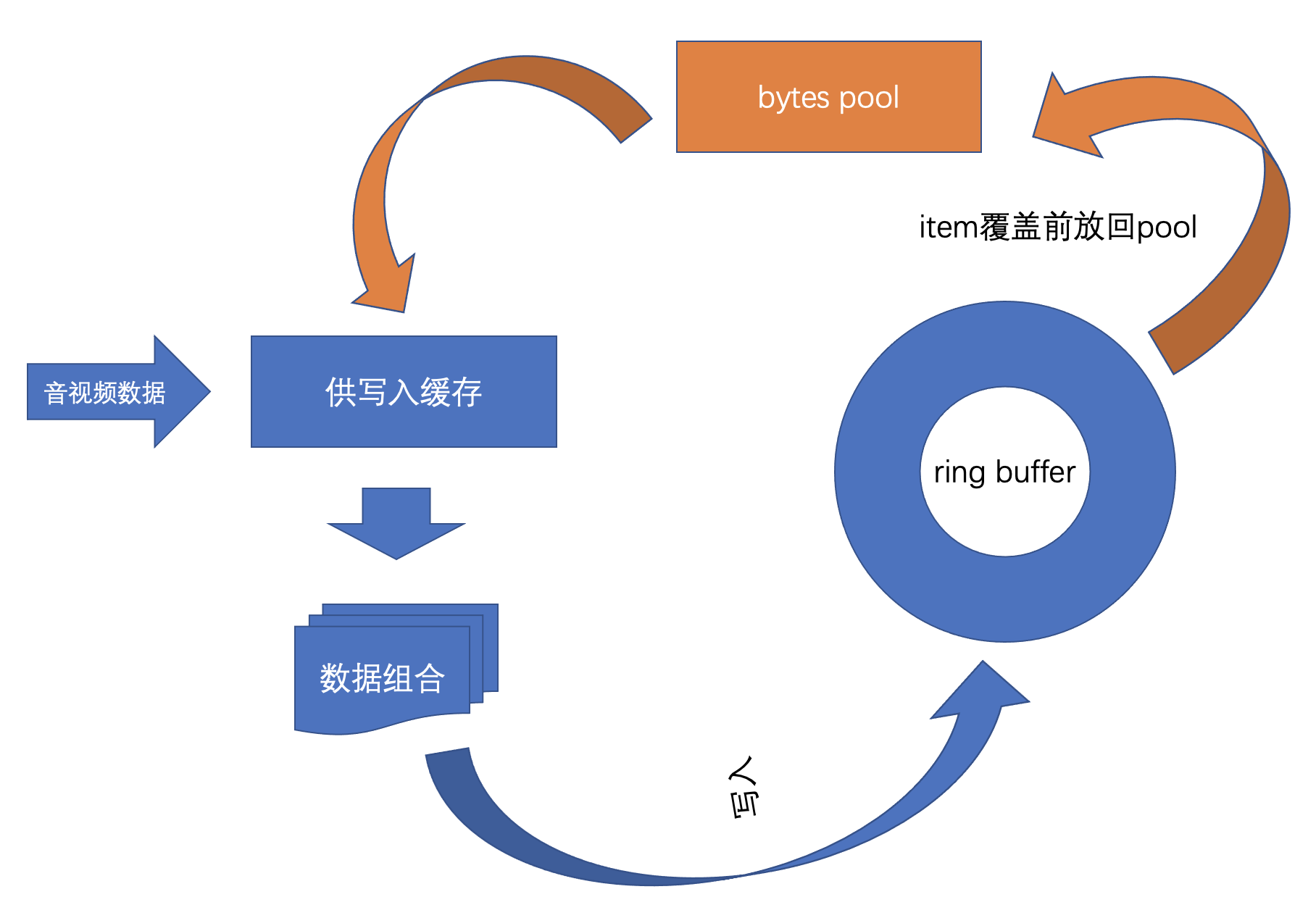

- Memory recovery mechanism

The largest part of this release is a rework of many data structures. It simplifies the code and adds a memory recycling mechanism that reduces GC pressure and improves performance under high concurrency.

Details will follow later.

- Add parsing of duration values in the configuration

Settings that represent a length of time can now be written with a unit; for example, 10 seconds is written as 10s. The value is received into a time.Duration.

- Solve the problem of no audio when the first screen is rendered

This problem came from the audio and video track design, which made it hard to determine the buffer length of the audio track. In this release, the video track broadcasts its key frames to the audio track, so that the audio track also takes part in first-screen rendering when the stream is read. The same problem also meant that a stream pushed to an external server had no audio data at the start, which led the receiving side to conclude that there was no audio track. It also affected audio and video timestamps, audio and video synchronization, and related behavior.

- Configuration file format modification

Every time value in the configuration file must now be written with a unit!

For example, delayclosetimeout: 10 must be changed to delayclosetimeout: 10s.

The available units include s (seconds), m (minutes), ms (milliseconds), and so on.
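When migrating an existing configuration, a small script can add the missing units. The following Go sketch is one possible approach, not a tool shipped with Monibuca. It assumes that a bare number previously meant seconds, as the delayclosetimeout example suggests, and normalizeLegacy is a hypothetical name.

```go
package main

import (
	"fmt"
	"strconv"
	"time"
)

// normalizeLegacy is a hypothetical migration helper. A bare integer such as
// "10" is assumed to have meant seconds and is rewritten to "10s". Values that
// already carry a unit are returned unchanged. Anything that still does not
// parse as a duration is reported as an error.
func normalizeLegacy(v string) (string, error) {
	if _, err := strconv.Atoi(v); err == nil {
		v += "s"
	}
	if _, err := time.ParseDuration(v); err != nil {
		return "", err
	}
	return v, nil
}

func main() {
	for _, v := range []string{"10", "10s", "500ms", "abc"} {
		out, err := normalizeLegacy(v)
		fmt.Printf("%q -> %q (err: %v)\n", v, out, err)
	}
}
```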

- Secondary development plug-in

Plug-ins built through secondary development need to adapt to the following definition changes in this release.

- Where audio and video data is received, the original *AudioFrame and *VideoFrame are changed to AudioFrame and VideoFrame. Each structure contains an *AVFrame and timestamp information (the calibrated timestamp).

- The contents of VideoDecConf and AudioDecConf become sequence headers in avcc format. For video tracks, an event that accepts common.ParamaterSets is added. The structure is an array that includes the SPS and PPS.

- The Raw property of the original AVFrame is renamed to AUList and changed from an array to a linked list. To read its values in a loop, use the Range function provided by the linked list.

- For the WriteAVCC method in Track, the second parameter is changed to a linked list structure. This structure can be obtained from BytesPool through Get or GetShell, depending on the situation.

- A BytesPool attribute is added to Track for memory recycling. It can be passed in when calling NewXXXTrack, so multiple Tracks can share one BytesPool. Note that a BytesPool must only be used within a single goroutine (it has no lock); different goroutines must each create their own BytesPool.

- The Media structure removes generics

- Deleted ring_av, separated read and write logic of AVRing, added AVRingReader structure

- AVFrame removes generics, audio and video data is changed from an array to a linked list

- Remove the PlayContext structure and replace it with AVRingReader

- Add implementations of a generic memory pool and a generic linked list with recyclable elements

- The rtmp plug-in code is heavily optimized to reduce memory allocations
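To make the idea of a generic linked list with recyclable elements more concrete, here is a minimal Go sketch. It is not the Monibuca implementation: the types Node, Pool, and List and their method signatures are hypothetical, and only the general ideas (a lock-free pool confined to one goroutine, a linked list read through a Range function) come from the notes above.

```go
package main

import "fmt"

// Node is one element of a singly linked list.
type Node[T any] struct {
	Value T
	next  *Node[T]
}

// Pool keeps released nodes for reuse. It has no lock, so it must only be
// used from a single goroutine.
type Pool[T any] struct {
	free *Node[T]
}

// Get returns a recycled node when one is available, otherwise a new one.
func (p *Pool[T]) Get() *Node[T] {
	if n := p.free; n != nil {
		p.free = n.next
		n.next = nil
		return n
	}
	return &Node[T]{}
}

// Put clears a node and stores it on the free list for later reuse.
func (p *Pool[T]) Put(n *Node[T]) {
	var zero T
	n.Value = zero
	n.next = p.free
	p.free = n
}

// List is a singly linked list whose nodes come from a Pool.
type List[T any] struct {
	head, tail *Node[T]
	pool       *Pool[T]
}

// Push appends a value, taking the node from the pool.
func (l *List[T]) Push(v T) {
	n := l.pool.Get()
	n.Value = v
	if l.tail == nil {
		l.head = n
	} else {
		l.tail.next = n
	}
	l.tail = n
}

// Range calls fn for each value in order and stops early if fn returns false.
func (l *List[T]) Range(fn func(T) bool) {
	for n := l.head; n != nil; n = n.next {
		if !fn(n.Value) {
			return
		}
	}
}

// Recycle returns every node to the pool and empties the list.
func (l *List[T]) Recycle() {
	for n := l.head; n != nil; {
		next := n.next // save before Put overwrites n.next
		l.pool.Put(n)
		n = next
	}
	l.head, l.tail = nil, nil
}

func main() {
	pool := &Pool[[]byte]{}
	list := &List[[]byte]{pool: pool}
	list.Push([]byte{0x67, 0x42})
	list.Push([]byte{0x68})

	total := 0
	list.Range(func(b []byte) bool {
		total += len(b)
		return true
	})
	fmt.Println("total bytes:", total)

	// After Recycle, the next Push reuses a node instead of allocating one.
	list.Recycle()
	list.Push([]byte{0x65})
}
```

Reusing nodes this way avoids an allocation per frame, which is where the reduction in GC work comes from; the cost is that the pool must stay confined to one goroutine, or gain a lock.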
